Understanding REF

On Thursday March 26th, a dozen colleagues from around BU participated in a seminar on REF and Impact Monitoring in the Social Sciences. The Impact session, organised by Professor Ann Brooks from HSC, was led by Sociology Professor John Scott, an active academic citizen and prolific writer, often at the cutting edge of his field. Professor Scott has served on the Sociology RAE/REF panel for the past 15 years of exercises, as well as overseeing a submission for Plymouth University.

Professor Scott started the seminar with a brief history of the development of research assessment, setting the exercise in the context of our ‘audit culture’ of regulation in academia. As the REF changed once more for the 2014 cycle, the big question we now face is: how will the ‘impact agenda’ influence and shape research practice?

Speaking of the challenges of creating REF input plans, Scott discussed the personal cost that comes with making strategic decisions about who should be included in which subject area. People doing very strong research can end up excluded from a submission because they are not a good fit with the university’s strategy for putting units together. The effects of this interpersonal dimension should be taken seriously throughout the REF planning process.

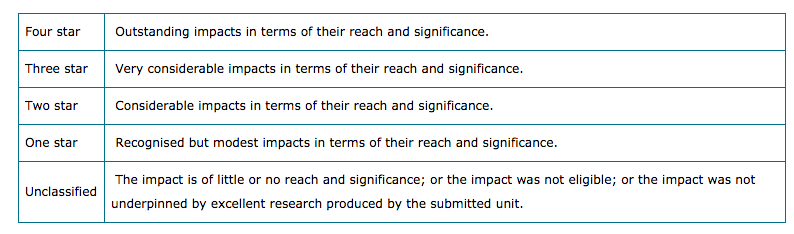

Scott summarised the profiling system used in REF 2014, which is likely to run again for REF 2020. Panels judge Outputs (65%), Environment (15%) and Impact (20%). He explained that the process works similarly to exam second marking. After rating a sample of submissions from across these profile elements, panel members met to look for patterns and reach consensus on the reasoning behind allocating 3* and 4* marks. This was done across subject areas, which meant, for example, trying to compare an architect’s built shed with a medical researcher’s new diagnostic system.
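To make the weighting concrete, here is a minimal sketch of how the three elements might combine into a single profile. It assumes the percentages above act as weights on each element's star-rating profile. The function name `overall_profile` and every figure in `example` are invented for illustration and do not come from any real submission.

```python
# Weights for each profile element, in percent, as quoted in the seminar.
WEIGHTS = {"outputs": 65, "impact": 20, "environment": 15}

# Star levels used in a profile ("U" = unclassified).
LEVELS = ["4*", "3*", "2*", "1*", "U"]


def overall_profile(sub_profiles):
    """Combine per-element profiles into one weighted profile.

    Each sub-profile maps a star level to the percentage of that
    element judged to be at that level (each should sum to 100).
    """
    return {
        level: sum(WEIGHTS[element] * sub_profiles[element][level]
                   for element in WEIGHTS) / 100
        for level in LEVELS
    }


# Hypothetical unit: all numbers below are made up for illustration.
example = {
    "outputs":     {"4*": 20, "3*": 50, "2*": 20, "1*": 10, "U": 0},
    "impact":      {"4*": 40, "3*": 40, "2*": 20, "1*": 0,  "U": 0},
    "environment": {"4*": 0,  "3*": 60, "2*": 40, "1*": 0,  "U": 0},
}

print(overall_profile(example))
# {'4*': 21.0, '3*': 49.5, '2*': 23.0, '1*': 6.5, 'U': 0.0}
```

In this made-up case, a strong impact profile lifts the 4* share of the overall profile from 20% (outputs alone) to 21%, which shows why the 20% impact weighting is worth planning for.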

Scott reinforced that citation indexes were not the driving factor in rankings. While citation indexes might be discussed as evidence, there is a clear understanding now with HEFCE that high citations can be because a paper is great—or terrible.

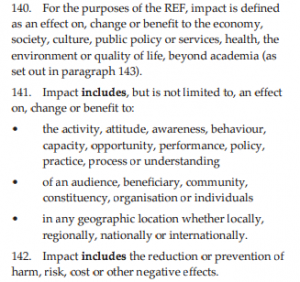

Impact in REF 2014

With the new impact component for REF 2014, universities submitted one case study for every 8 academics, with a minimum of 2 case studies needed for any submission unit. Alongside the Case Study comes an Impact Statement on strategy and approach to impact, which is equally assessed. Tracing some of the history of the rise of the impact agenda, Scott noted the role government expectations played and the fuzziness of the definition of impact.

As this was people’s first go at writing impact statements, universities took very different approaches. The retrospective collection of evidence often led to an ‘impact as add-on’ approach and a top-down grouping of impact case studies. With more planning time for REF 2020, it is important to take a more systematic and dynamic approach to grouping case studies.

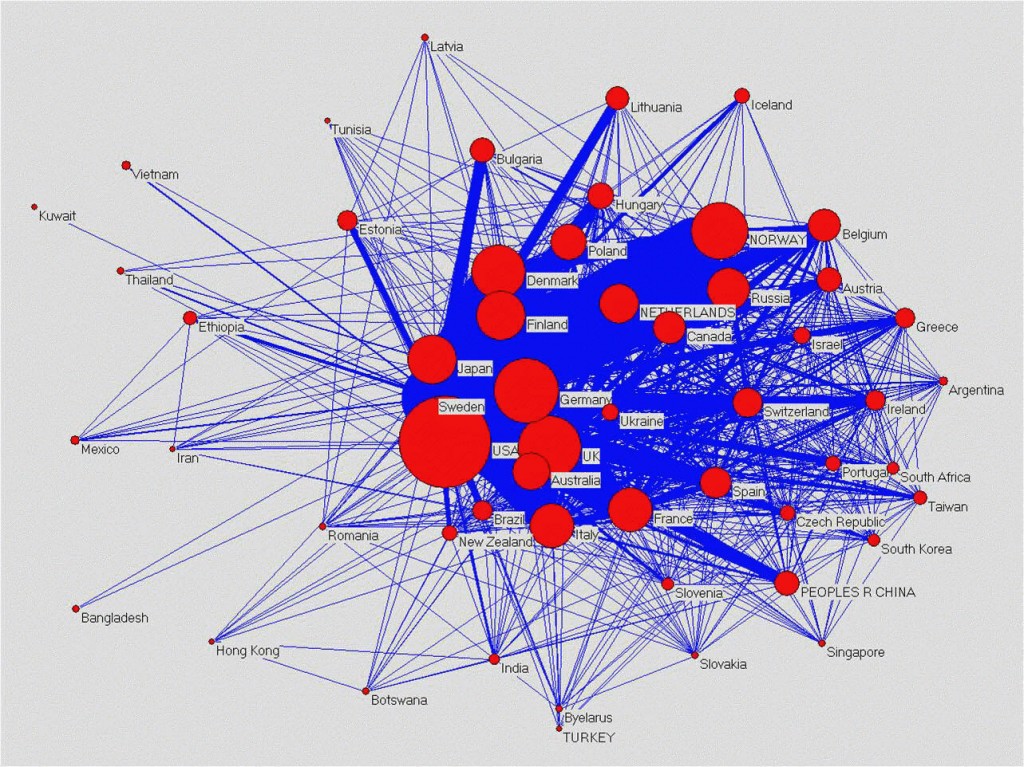

Scott emphasised that economic impact is only one aspect of impact. Cultural and Societal value does not need to be measured in financial terms. Panels in the Social Sciences and Humanities understand that impact has different definitions and overlaps between spheres. He highlighted that impact can be on the local, regional, national or international level.

For REF 2014, people tended to play it safe, going for the most obvious and easy to measure kinds of impact. It was difficult to find a balance between offering guidance and keeping things open so people did not feel constrained. Offering the example of a case study on conversation analysis, Scott discussed how researchers documented the ways their work was taken up by people working with pension claimants to improve relationships and workplace effectiveness.

Scott described how impact is assessed in terms of reach and significance. ‘Reach’ is not a reference to geographical scope, but to how many people in a given area of impact you reach. For significance, the assessment is of ‘how much’ something changed (a policy, a practice, a mode of delivery), but this is still a qualitative judgement.

Demonstrating Impact

In the second half of the seminar we moved to discuss methods of collecting and demonstrating impact in our research. Scott opened the conversation with another exam metaphor—panel members can only assess what is on the page.

Unlike outputs that you can carry across institutions, impact case studies must be for research undertaken in your submitting institution. While this can be hard for researchers changing home universities, for universities it means that, in principle, impact for former, retired or deceased colleagues can be counted. This was a big issue in REF 2014 and may arise in the consultations for REF 2020.

For REF 2020 it is likely that January 1st 2000 will be the start date of the research period, with impact demonstration assessed from January 1st 2014. This means that for the next REF, research we have already conducted may be evaluated for impact, though there is room to include ‘emerging impact’ for later research.

Scott emphasised the need to have mechanisms in place for collecting evidence of impact. For REF 2014, researchers largely collected this on their own, but for REF 2020, it is important that institutions support this process, in addition to it being built into the research project.

In assessing impact, REF panels are looking for three key aspects:

1. Quality of underpinning research: the 2* threshold

2. Significance and reach of impact: the evidence

3. Compelling narrative linking research to impact

The different time frames for outputs and impact mean that the (1) underpinning research being evaluated is often not the same as your research outputs. In relation to what ‘counts’ as underpinning research, we discussed nontraditional research from both ends of the spectrum: research done under commercial or government secrecy agreements, and research done in entirely open access and digital forums. For REF 2014 these cases were dealt with on an ad hoc, problem-solving basis. For REF 2020, if people become more adventurous in their submissions, more dynamic modes of assessing impact will be needed.

For all submissions, Scott noted the importance of disentangling research processes from research impact. For researchers engaged in participatory and action research methods, this means explicitly setting out the background academic context and theoretical basis of your research separately from its purely applied aspects. It becomes very important to demonstrate both how your new academic research is innovative and how you evaluated its impact (i.e. carrying out an impact assessment within your own project).

In relation to (2), Scott discussed the ways you can use either qualitative or quantitative forms of evidence for impact: anything from press reports and media coverage, to a survey carried out independently, to letters of support from policymakers or industry. You can also track feedback and comments you have received from readers, as well as hits on your website. These are a starting place for evidencing impact and the kinds of things that institutional support will be helpful for.

This is also where (3) narrative is so important. With impact, you are telling a story that goes from the academic context of your research through to the demonstrable effects your research has had beyond academia.

Key questions the REF 2014 panels asked about impact were:

- What was the research activity: a person, a project, or a programme?

- Was the research actually carried out at the university?

- Was the research carried out within the time period allowed?

- Did the impact occur within the time period allowed?

- Did the research actually contribute to the impact?

- Is there supporting evidence for the impact?

While research outputs in the Social Sciences and Humanities are often ‘owned’ by an individual or small groups of co-authors, impact can be linked to broader programmes and groups. Your impact narrative should connect your contribution to a larger project or programme’s impact.

For REF 2014, impact strategies were sometimes weak or poorly demonstrated. There were also challenges tying impact statements to institutional support mechanisms. The panel was looking for narratives—stories that demonstrated how support mechanisms supported impact, and how those mechanisms could be built upon in the future.

REF 2020 – Going Forward

For the next REF, HEFCE is looking into refining impact assessment. This includes their analysis of measurement tools, expansion of impact criteria, and reflection on how to best operationalise these.

As universities devise institutional support mechanisms for collecting evidence of impact, here are some practical things you can do:

- Look for reviews that make reference to your research

- Track website visits, press appearances and public forms of citation

- Keep a folder of emails you receive from outside academia mentioning how your work is being taken up

- Make sure to build mechanisms for tracking impact into your research projects from the start (surveys, feedback)

Returning to strategy to finish off the session, Professor Scott reminded us that not everyone has to be putting out 4* outputs and 4* impact case studies! When organising for REF 2020 it will be important to embrace and support the diversity of our colleagues’ strengths.

FOR MORE ON REF REFLECTIONS & HEFCE READ: http://blogs.bournemouth.ac.uk/research/2015/03/26/ref-update-hefces-reflections-event-25-march-2015/
