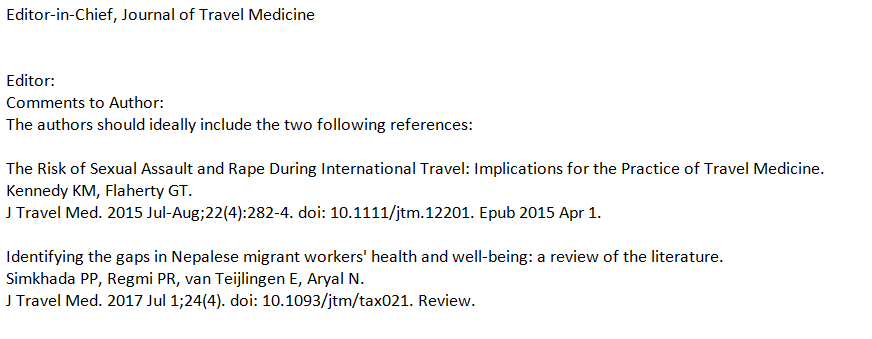

Left to right: Alan Sinfield, Tamsin Wilton and Alexander Doty

Whilst researching a new Level 5 ‘Media Perspective’ unit (Life Stories and the Media) for the Department of Media Production, I decided to discuss the concept of ‘dissident reading’ in the lectures, drawing on the work of Alan Sinfield in this area. In doing so, I not only checked whether our library had the relevant book, Cultural Politics – Queer Reading (it did), but also looked online to see what Alan was working on now.

Alan Sinfield had been a catalyst in my research journey. Back in 2004, when I was in the final stages of my PhD, Alan invited me to speak at a research seminar workshop at the University of Sussex. Alan’s work was fundamental in developing gay and lesbian studies in theatre and popular culture. I remember that he was a little critical of my interest in the ‘carnivalesque’, but largely supportive. That seminar gave me valuable experience in developing my ideas for the eventual PhD at Bournemouth, and it provided a much-needed psychological boost as the submission date loomed. I remember asking Alan some probing questions about his new research interests; he replied that he was working on something new, concerning ageing. It was remiss of me not to follow up on this, despite having more contact with the University of Sussex in other areas later on, such as working with Sharif Molabocus, who contributed to two of my edited collections, and working there as an external PhD examiner. On searching for Alan’s latest work, I discovered that he had passed away last year, aged 75.

In thinking through my meeting with Alan in 2004, I had not realized that soon after this he would retire, as Parkinson’s disease would affect his speech. Perhaps I now understand Alan’s interest in writing about ageing, at a time when his life must have been changing. The loss of Alan also made me think about others in the LGBT and queer studies media research community whom I have met and who are no longer with us.

Before I was accepted to study for my PhD at Bournemouth, I had applied to the University of the West of England. When the panel interviewed me, I met Tamsin Wilton, whose ground-breaking book was entitled Immortal, Invisible: Lesbians and the Moving Image. While I did not get the doctoral scholarship at UWE, Tamsin confided in me that her research was mostly done in her own time, suggesting that the department then considered her work ‘too radical’. Tamsin passed away in 2006, only a few years after we met, and I remember thinking how much we had lost in her passing. Her work was revolutionary, and she genuinely encouraged me to press on with my research at a time when LGBT studies were less popular.

Besides the loss of Alan Sinfield and Tamsin Wilton, I cannot forget the sudden loss of Alexander Doty. As with Alan and Tamsin, I met Alex early in my research journey, briefly, when he was presenting at the feminist Console-ing Passions Conference in Bristol in 2001, a conference that I would eventually co-organise this year at Bournemouth. In 2001, I was studying for an MA at Bristol, and I had never been to an academic conference before, but as students we were required to help out. I remember attending Alex’s paper on the TV series Will and Grace, and I had a brief conversation with him over coffee. Somehow, I made some links between his ideas and those that I was studying, and I am forever grateful to Alex for his work and his unpretentious demeanour. If I am honest, though, I was a little in awe of him, and at the time I could never have imagined that I would publish my own academic work.

So I think that we often encounter inspirational researchers along the way, at conferences, seminars, symposiums, and even in interviews. For me, the loss of Alan Sinfield, Tamsin Wilton and Alex Doty almost seems too much to bear, as clearly they had far more to offer beyond the stellar work that remains. As I argued when discussing the legacy of Pedro Zamora (the HIV/AIDS activist) and the meaning of a life cut short, theoretical and political ideals potentially live on. Our task is not only to remember all that potential, but also to continue it in any way we can.

BU has an agreement with Springer which enables its authors to publish articles open access in one of the Springer Open Choice journals at no additional cost.

On 4 September 2018, 11 national research funding organisations, with the support of the European Commission including the European Research Council (ERC), announced the launch of cOAlition S, an initiative to make full and immediate Open Access to research publications a reality. It is built around Plan S, which consists of one target and 10 principles.

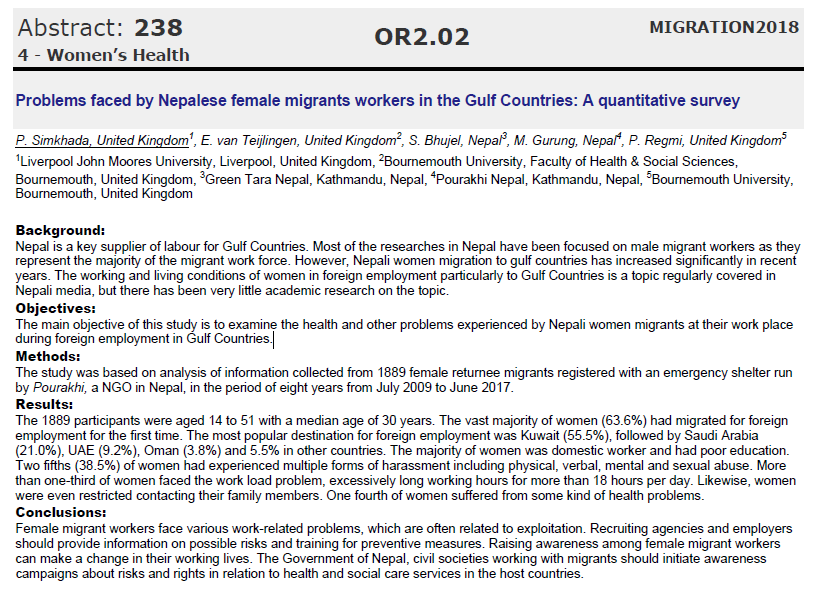

New CMWH paper on maternity care

From Sustainable Research to Sustainable Research Lives: Reflections from the SPROUT Network Event

REF Code of Practice consultation is open!

ECR Funding Open Call: Research Culture & Community Grant – Apply now

ECR Funding Open Call: Research Culture & Community Grant – Application Deadline Friday 12 December

MSCA Postdoctoral Fellowships 2025 Call

ERC Advanced Grant 2025 Webinar

Update on UKRO services

European research project exploring use of ‘virtual twins’ to better manage metabolic associated fatty liver disease