We’ve been inundated with words – Ministerial speeches this week, (g)rumblings over social mobility continue, and Parliament showed no signs of slowing down as it hurtled towards recess. Plus there is lots to look forward to as we look to the next horizon. With that in mind, for BU readers we have issued a horizon scan in our separate occasional series. And for staff outside BU, we have published the summary on the Lighthouse Policy Group blog site.

Spending Review

The Chancellor has launched the comprehensive spending review, which will conclude in the Autumn. Its priorities are:

- strengthening the UK’s economic recovery from COVID-19 by prioritising jobs and skills

- levelling up economic opportunity across all nations and regions of the country by investing in infrastructure, innovation and people – thus closing the gap with competitors by spreading opportunity, maximising productivity and improving the value add of each hour worked

- improving outcomes in public services, including supporting the NHS and taking steps to cut crime and ensure every young person receives a superb education

- making the UK a scientific superpower, including leading in the development of technologies that will support the government’s ambition to reach net zero carbon emissions by 2050

- strengthening the UK’s place in the world

- improving the management and delivery of our commitments, ensuring that all departments have the appropriate structures and processes in place to deliver their outcomes and commitments on time and within budget

Note that the government response to the Augar report and the conclusion of the Review of Post-18 Education and Funding are also due alongside the outcome of the review in the Autumn, as confirmed by the Minister last week. This was (last) promised for the Autumn last year, alongside the comprehensive spending review that never happened because of the election… (it seems so long ago!).

So, with Augar in mind perhaps, Boris took to the Telegraph last weekend to announce that the Cabinet Office is considering restructuring HE fees. His piece sits behind a paywall, so we include the coverage from Wonkhe and Research Professional below.

- Wonkhe: At the weekend, the Prime Minister unexpectedly revealed that variable fees may be back on the agenda. He said in an interview that Number Ten is reviewing the “pricing mechanisms” of university courses, in a move that could see “reductions in the cost of science and engineering degrees, with higher fees for some arts subjects.” We learn that Johnson believes that what went wrong was everybody charged the “maximum whack”, because no institution felt that they could accept the loss of prestige associated with offering a course that was cheaper. “In reality, it would have been much more sensible if courses had been differently priced. We are certainly looking at all that.” If that seems familiar, that’s because it sounds eerily similar to an Australian government announcement last month. Could we be heading in the same direction?

- Research Professional: It would be easy to lump Johnson’s comments in with the recent spate of government attacks on ‘low-value courses’. Both education secretary Gavin Williamson and universities minister Michelle Donelan have in recent weeks used language that alluded to their beliefs on varying course quality, and it would appear that Johnson shares their concerns. By financially disincentivising study in the arts and humanities, you risk creating the perception that these broad disciplines (and all the cultural and societal capital they contain) are the sole reserve of the moneyed elite. Education for the poor is all about getting a job; education for the rich is about personal enrichment and development. And getting a job, too, of course—which doesn’t seem to have been too much of a problem for Williamson (social sciences degree), Donelan (history and politics) or Johnson (literae humaniores).

Research Professional have full coverage here and here.

The piece also states that the Government intends to support FE in the same way Governments have supported HE. Does this ring alarm bells for anyone else? Tuition fees for FE? Even if fees started small (as they did with HE), this would be a major change and, many would argue, a blow for social mobility (even if the fees were income-contingent). Of course, this is jumping the gun, and what the Government probably actually meant by that statement is providing a good level of funding for the FE sector. However, FE fees must have crossed the Government’s mind – apart from anything else, the contrast between apprenticeships (paid for by employers and much grumbled about by government because the levy has not achieved what they wanted) and tuition fees is interesting.

There was lots of commentary on Twitter following the Telegraph article. This one summed it up for Sarah (read the two comments too!) and this one posts excerpts of the article.

Parliamentary questions

HE restructuring regime announced

The Government announced their scheme to support HE institutions in financial difficulties (detail here). They call it a ‘restructuring regime’ because that is what it is: any institution needing to access the loan to keep itself afloat can expect full intervention, with courses chopped and services curtailed so that it delivers only the aspects the Government considers to be in the public interest (fitting national priorities and any demonstrated local needs). The Government are clear that the support isn’t for all providers: if the Government doesn’t think an institution can deliver in the national interest, it will be allowed to close. The Government’s intention is not to provide a blanket bail-out to the sector, and the scheme is not a guarantee that no organisation will fail (WMS). If that happens, the OfS student protection arrangements will come into force – see later in this section for the consultation on those.

- An independently-chaired Higher Education Restructuring Regime Board will be established, which will include input from members with specialist knowledge external to Government. The Board will advise the Minister.

- It’s a repayable loan, offered only as a last-resort measure, with specific conditions that align to wider Government objectives: the scheme aims to support the important role universities play in their local economies, and preserve the country’s science base.

- If eligible, providers will need to comply with conditions (harsh ones, see below) to focus the sector towards the future needs of the country, such as delivering high quality courses with good graduate outcomes. As a condition for taking part in the scheme, universities will be required to make changes that meet wider Government objectives, depending on the individual provider’s circumstances. This could include ensuring they deliver high quality courses with strong graduate outcomes, improving their offer of qualifications available, and focusing resources on the front line by reducing administrative costs, including vice-chancellor pay.

Conditions would be determined on a case-by-case basis, but there are some baselines:

- Clear economic and value for money case for intervention

- The need for support is because of C-19 (i.e. not underlying and longer-term financial weakness) and there must be a clear and sustainable model following the restructure (i.e. once the Government has provided the loan the provider won’t fail anyway).

- Providers will only be supported if their collapse would cause significant harm to the national or local economy/society (examples given are the loss of high-quality research or teaching provision, a disruption to COVID-19 research or healthcare provision or overall disruption to policy objectives including a significant impact on outcomes for students).

- Providers must comply with their legal duties to secure freedom of speech. (How can this be demonstrated? And have the DfE just zipped through the last five universities ministers’ pronouncements and added their pet projects in? Probably not, or accommodation costs (Skidmore) might have appeared. And they haven’t gone as far as printing costs (Gyimah), although nearly; see below.) So no, this is probably purely political.

- The provider must continue to meet the OfS’ regulatory requirements.

- While the Government explores whether to provide support, the institution must cooperate with independent business review advisers.

The business review will address a number of things, and this is where the direction of travel really becomes clear:

- Provision – removing any duplication of provision, cutting out the dead wood and refocusing the offer on high-quality courses with strong student outcomes, low drop-out rates and high proportions of graduates achieving the high-skilled employment measure which ALSO develop skills aligned to local and national economic and social employment needs.

- Revising the level of provision – could any of the provision be more effectively delivered at Level 4 or 5 or through a local FE college?

- Governance – strengthening governance and leadership

- Efficiency – reviewing admin costs to identify cost savings and efficiencies to achieve future sustainability (senior pay, professional services, selling or repurposing assets to repay loans, fund restructuring, closing down unviable campuses or provision, considering mergers and options for consolidation and service sharing including with FE). The growth of administrative activities that do not demonstrably add value must be tackled head on.

- Ensuring student protection – enabling current students to complete the course on which they enrolled, or an equivalent course, either by remaining at the same provider or by transferring to a different one.

Some other choice snippets:

- The funding of student unions should be proportionate and focused on serving the needs of the wider student population rather than subsidising niche activism and campaigns. (On this RP say: Is the government really suggesting that representation of students is overfunded? And are universities expected to interfere further in what student unions get up to?)

And this is telling:

- We would encourage any provider looking to restructure outside the regime also to bear in mind the statement of policy direction set out in this document, to align with our overall strategic direction for HE in England.

- Public funding for courses that do not deliver for students will be reassessed.

- It is probable that the sector in 2030 will not look the same as it does now

The DfE have done a fantastic job of setting out all the Government’s priorities. As the document says very clearly, this is not just about the restructuring regime; it is about the Government’s priorities for HE going forward.

Research Professional point out that access and widening participation get no mention. And on post-graduation employment that doesn’t reach the ‘graduate’ level threshold, they ask: what if the benefits of taking a [degree] course are mainly about gaining better opportunities than are available without it?

SoS Gavin Williamson stated: We need our universities to achieve great value for money – delivering the skills and a workforce that will drive our economy and nation to thrive in the years ahead. My priority is student welfare, not vice-chancellor salaries.

These latest rescue measures are not available to independent HE providers who do not already receive public funding. Alex Proudfoot, Chief Executive of Independent Higher Education, said:

- The coronavirus pandemic has generated huge disruption and uncertainty in all walks of life, and higher education is no exception. We welcome the Government’s recognition that some providers may run into difficulties through no fault of their own…Independent higher education providers do not expect a bailout, but they do expect their Government to take ownership of the nationwide lockdown it ordered, and to recognise the impact this decision had in the form of significant lost revenues from the cancellation of intakes and the crucial summer short course market. Businesses in the hospitality and tourism sectors were rightly helped to make up some of the income lost from closures. No less of a helping hand should be extended to education businesses – SMEs, for the most part – for whom the lockdown directly caused cancellations which have hit their bottom line. They urgently need the same consideration of a Business Rates Holiday and targeted grants to sustain them past this difficult period, and allow them to play their part in the essential task of retraining the many thousands of workers displaced by this crisis.

It would be a difficult task for the Government to extend a similar ‘restructuring regime’ bailout to the independent sector (not least because the DfE don’t have their heads around these types of providers yet), but Alex makes a key point in highlighting the lack of arrangements for this sector. This Government is keen for independent providers to be a success (and suitable competition to drive up standards in regular HE); these private providers are also a crucial part of the bridge between industry and education in the Government’s technical and vocational pathways agendas. Yet these providers may go under before the FE reforms are enacted.

RP comment:

- The terms of the bailout for universities facing financial difficulties are such that no vice-chancellor could ever accept them—a merger would be a preferable option to being run by the board of the Restructuring Regime.

- The consequence will be that in order to avoid straying into financial hot water, university managers will be much more hawkish than they might otherwise have been over cost-cutting. That means more job losses than might have been the case. Intentionally or not, the government has fired the starting pistol on a kind of anti-furlough scheme for university staff.

- Not only will universities be reducing capacity at a time when they should be gearing up for the unprecedented investment promised by the R&D roadmap, but an exit from the restrictions imposed by the coronavirus pandemic depends largely on the success of university research teams like those working on vaccines…

- The promising results coming out of these labs are not the product of lone geniuses but the outcome of a research ecosystem, which includes cross-border collaboration, and an institutional pyramid, which involves teaching and research in subjects other than the life sciences.

- Those who are calling the shots in government education policy—this does not necessarily include Williamson and Donelan—are listening to voices telling them that the cure to the UK’s productivity problems is higher technical skills. However, the UK does not have an economy with extensive opportunities in advanced manufacturing—80 per cent of GDP is generated by services, which require graduates with broad and flexible skills.

- Rather than producing graduates for the UK economy as it is, the education system is being pushed into training people for an economy that does not exist. Williamson wants a German-style further education system, but he does not have at his disposal the several centuries it took to build up the German guild system and the Marshall Plan that built up the German manufacturing base—or the euro, which sustains it.

OfS: consultation on new powers to intervene

Alongside this, the OfS announced a consultation on a new targeted condition of registration allowing the OfS to intervene more quickly where providers are at material risk of closure, and where there is therefore an increased risk to students’ studies. The intention is that the new powers continue post-pandemic: The implementation of proposals set out today would help ensure the protection of students on an ongoing basis, both during and after the pandemic.

The OfS are proposing that providers comply with specific directions to take action to protect students, such as:

- continuing to teach existing students before closing

- making arrangements to transfer students to appropriate courses at other universities and colleges

- awarding credit for partially completed courses and awarding qualifications where courses have been completed

- offering impartial information, advice and guidance to students on their options and next steps

- enabling students to make complaints and apply for refunds or compensation where appropriate

- archiving records so that students can access evidence of their academic attainment in the future.

Nicola Dandridge, Chief Executive OfS, said:

- Our regulatory approach has extended the protection available to students if their course, campus or provider closes. We had intended to consult on measures to strengthen our ability to protect students, including from the consequences of provider closure. But with financial risk heightened during the pandemic, it has become clear that we need to prioritise some elements of those plans. Nobody wants a university or college to run into financial trouble, but where this happens, it is vital that students are able to complete their studies with as little disruption as possible and receive proper credit for their achievements.

- This proposed condition would ensure that we are able to act swiftly and decisively where there is a material risk of closure. We have been clear that, as a regulator, we wish to reduce unnecessary burden on higher education providers. For the vast majority of universities and colleges that are in a sound financial position, these changes will not have any effect.

- This is a carefully targeted and proactive measure to protect students, particularly during these uniquely challenging times. Where universities and colleges are at material risk of closure, we will ensure that our focus is on the needs of students.

Meanwhile over in FE…

The Government (DfE) has set out proposals to strengthen relationships with colleges and promote better planning within FE provision. The Dame Mary Ney review on the financial oversight of colleges concluded nine months ago and has now been published by the Government along with its response. It proposes:

- Strengthened alignment between the Further Education Commissioner and Education and Skills Funding Agency (ESFA)

- A regular strategic dialogue led by the ESFA and Further Education Commissioner’s team with all college boards around priorities, starting from September 2020

- New whistle-blowing requirements for colleges, including publication of policies on college websites

- A review of governance guidance to strengthen transparency

- A new College Collaboration Fund round

More changes are expected to be announced when the Government publish their FE White Paper (due in the Autumn).

Some key excerpts from the written ministerial statement:

Survey – Despite the Government’s intention to invest in FE, the Association of Colleges’ summer 2020 survey has revealed that colleges are providing hardship support beyond the funds they have available, that laptop and connectivity resources don’t cover enough of their disadvantaged learners, and that redundancies are imminent. The report states:

Redundancies:

- 46% of colleges are planning to make redundancies by the end of the autumn term 2020.

- 21% will have made redundancies by September 2020.

Hardship:

- 88% of colleges have evidence of increased student hardship.

- 90% of colleges report that their bursary / hardship funds are under more pressure as a result of the Covid-19 pandemic.

- 56% of colleges reported that their existing and additional bursary funding from DfE has not enabled them to purchase laptops and/or connectivity (dongles) to support all their disadvantaged learners.

- 78% of colleges would need additional resources to support the provision of free college meal vouchers to current eligible students over the summer holiday period.

The level of additional resource needed to meet these hardship needs ranged from £20,000 to £2,000,000 with an average of £300,000 per college.

And on transport:

- Four out of five colleges anticipate major transport difficulties around September re-opening.

Comments from FE sector on student hardship:

- Support from government is not on a level playing field with schools.

- We are providing meal vouchers over the summer, but we can’t really afford to do this.

- Digital poverty is a major concern and pressure.

- Access to a laptop without broadband is of limited use.

- Laptop bursaries are wholly insufficient to meet demand.

- Free transport would make a huge difference to learners. Removal of the Oyster for travel will increase pressure on young people.

- Bursary allocation has been reduced for next year.

FE Vision – The Independent Commission on the College of the Future has published People, productivity and place: a new vision for colleges – a vision for the college of the future, accompanied by a collection of short essays and case studies about the civic role of colleges.

Education Committee

Here is more coverage on what was said at last week’s Education Committee hearing with Minister Michelle Donelan. We covered this last week but the transcript wasn’t out. You can read it in full here.

Another ministerial speech

We’ve had quite a number of speeches on HE recently – Boris, Gavin, Michelle and Shadow Minister Emma Hardy have all spoken multiple times over the last few weeks. The Minister spoke again this week as part of the Festival of HE (even though it finished over a week ago). Research Professional (RP) cover the speech which reiterated the same themes as her previous speeches and appearance in front of the Education Committee:

- Much of her address centred on what we may now be able to call the minister’s hobbyhorse: differentiating between what she terms “true”, “genuine” social mobility and the old social mobility…

- She stated: I want to continue to make clear the passionate importance I place on achieving genuine social mobility…true social mobility is when we put students and their needs and career ambitions first—be that in higher education, further education or apprenticeships.

- RP continue: To be fair to Donelan, it is too simplistic to say that simply attending university will be beneficial for everyone. There are good arguments to be made about how we measure social mobility…

- Donelan: True social mobility is not getting them to the door, it’s getting them to the finish line of a high-quality course that will lead them to a graduate job.

The shift in focus away from access alone and onto student support, achievement, careers and post-graduation support is already part of the current Access and Participation Plans. The big access-only push hasn’t existed since the demise of Aimhigher (and even then Aimhigher did so much more). So the Minister is really only bringing a focus to the aims the work is already pushing for, although (to use the words of a former access tsar) the Government will likely want further, faster progress. And this is the sticking point for most HE institutions: fast progress is not easy to come by.

RP state:

- Donelan’s example raises many questions and legitimate problems. What it does not do is offer any evidence that slowing the expansion of higher education will address those issues. Surely it would be better, for example, to look at why progression rates are lower among some groups of students, rather than concluding simply that university is not for them. The Government may, however, argue that this is what the sector has been doing – to little avail.

- It also seems fair to ask why those with the same qualification but a different colour of skin are not being hired into top roles (although that is also a fair question to ask of universities specifically). It seems unlikely that the sole cause of this career discrepancy is their attendance at a university—and even less likely that their progress would have been more in line with that of white graduates had they opted not to attend university at all.

- It would be wrong to paint the minister’s speech as another lecture on social mobility—that was only one part of the address. However, until Donelan addresses the issue of whether the demographic make-up of university attendees “matters” or not following her comments last week, it may be the issue that comes to define her tenure.

RP also have this article on the other aspects of Donelan’s speech, in which the Minister called for more modular courses for adult learners, lamenting the continued norm of three-year degrees.

- Donelan said she was “determined” the government would “support our universities to become more flexible” and deliver more modular courses, as “now is the time to innovate”…. modular courses are “tremendously desirable to adult learners looking to upskill, and it is likely to be more important than ever as the economy recovers from coronavirus”.

- “If Covid has taught us one thing in reference to the higher education sector, it is how flexible it is – let’s utilise this flexibility. Now is the time to build on the recent innovation we have seen.”

The Minister feels shorter courses are particularly important for portfolio careers.

- The minister also stressed she wanted to see “more emphasis on part-time learning that links with labour market needs and skills gaps”, more degree apprenticeships, and more higher technical education at Levels 4 and 5. She also spoke favourably about two-year degrees.

- “I…want the sector to think more about how it delivers learning differently,” she said. “Sadly, the three-year bachelor’s degree has increasingly become the predominant mode of study. But that doesn’t suit all students. Many young people would like to earn while they learn.”

Donelan also stated she wanted to see the practice of making conditional unconditional offers “end for good”: “I don’t want to see students making decisions that are not in their best interests. Quite frankly, there is no justification for such practices.” Once again, Donelan shows herself to be out of touch with the students for whom unconditional offers were originally designed. But maybe she doesn’t mean them.

The Guardian have a good article taking issue with the Government’s new stance, whereby they want fewer pupils progressing to university and have glossed over access for disadvantaged groups. The article challenges the Government:

- “We need to create and support opportunities for those who don’t want to go to university, not write them off,” says the education secretary. On this point, he’s right. But let’s be clear: it is the Conservative government who wrote off non-academic pupils as if they were bad cheques. Successive Tory education secretaries have made it harder to pass GCSEs, chopped vocational qualifications from the school curriculum, slashed FE funding by eye-watering amounts, and complicated the apprenticeship system so badly that only half as many young people are now starting an apprenticeship as in 2017.

- If Williamson wants us to believe that further and technical education can genuinely have parity in the future and this whole narrative isn’t just a way of pulling up the drawbridge on university access, here are a few things he could do.

- First, if there are going to be fewer undergraduate places, in order to preserve social mobility a large portion should be reserved for those from low-income families or areas. Second, if apprenticeships are for everyone, not just “other people’s kids”, then why not set another target? Make it so that every school is expected to send, say, 25% of young people on to apprenticeships. No exceptions for grammar schools. No exceptions for schools in leafy areas.

- Finally, make student maintenance loans (the ones used for living on) available to those on vocational routes. A big draw of university life is the ability to live independently, using the loan and topping it up with part-time work. Apprentice wages are often too low to live on, and long hours make top-up work difficult. Fund vocational routes fairly and the calculation becomes very different.

- Do this, and Williamson’s announcement suddenly looks like a genuine revolution. Until then, I fear it’s just another way of cutting back and preventing people like me from going into higher education. Unimaginable? Not any more.

And the shadow minister speaks too

Shadow HE Minister Emma Hardy spoke at the Convention for HE (RP cover it here – see the second half of the article) and today in Conversation with HEPI.

RP report she commented on the Government’s social mobility stance:

- More incisive were Hardy’s words on the issue of the week: does the background of the people who enter university matter, or is it getting in the way of “true social mobility”? In short, the shadow minister’s answer was: Yes, it matters.

- “The government’s response to the looming university financial crisis has been to launch an attack on the sector, accusing it of offering ‘low-value degrees’,” she said, “astonishingly implying that if you don’t come from a family where your parents have gone to university, you could be tricked into attending university.

- “To enable real choice for everyone, the government should be focused on identifying the barriers to learning and breaking them down, not establishing more.

- “We cannot ever see a situation again where education is viewed as a privilege for the few and not a right for all… No country’s economy has grown on the back of reducing access to higher education. It matters which groups in society get access to university.”

On the Government’s rescue HE restructure package RP report that Emma stated:

- “Labour cannot countenance the loss of a single university,” she said. “At a time when the country is facing the possibility of the deepest recession in its history—when unemployment is set to soar and when retraining and reskilling will be more needed than ever—the government’s position is beyond rational comprehension.”

Emma Hardy’s focussed speech during this week’s Conversation with HEPI was interesting. Labour’s approach to HE agrees with several of the Government’s themes; however, the devil really is in the detail, and there was a world of difference in tone. She is a big fan of FE and HE working together seamlessly to deliver for their local communities. Of course, the Government have been pushing HE to support and work with the full range of local institutions, from primary up, for years. Labour’s approach is ‘work with to deliver’, not the ‘done to’ sharing of expertise that Theresa May originally envisaged.

Themes:

- A passion for degree apprenticeships and Level 4 and 5 qualifications. However, Emma took issue with the Government’s universal aim for all HE institutions to offer them, stating that offering degree apprenticeships depends on the local area, which in turn relies on local infrastructure and on Government investment to build that infrastructure. She stated that the Government’s newfound love of this sector is ringing hollow.

- Post-qualification admissions – the sector shouldn’t block a change in approach, and it shouldn’t be dropped just because it is so difficult to do. Instead, Emma favoured a cross-sector collaborative approach, dealing with the difficult elements in turn and gradually adjusting each aspect from FE to HE to produce a new system that works across the board.

- Emma wasn’t opposed in principle to student number caps (as a temporary solution); however, she disagreed with the Government’s method and stated that it hadn’t got rid of the competition as intended. Looking to the future, she wouldn’t rule out a form of student number limitation; however, she said she was new to the sector and hadn’t yet formulated a firm position on the matter.

- Key differences included Labour’s approach to social mobility, which opposes the reduction in courses and allowing universities to fail (although its position on private providers wasn’t stated). Labour believes genuine choice is needed, particularly for disadvantaged groups whose personal circumstances (such as caring responsibilities) and the limitations of the geographic area they live in may already restrict the choices available to them. Emma stated that no university should be allowed to fail – failure impacts on choice, aspiration and the local economy, potentially creating cold spots. Unsurprisingly, Emma supported the civic university model.

- While overall Emma was against differential fees and intervention to cut courses, she accepted that some courses did need looking at. She also stated that the sector shouldn’t leave itself vulnerable to lazy attacks [from the Government and media].

- Emma was firmly behind the new five-year targets in the Access and Participation Plans, stating that they could be revolutionary, changing the make-up of universities and changing the system.

- She also disagreed with the Government’s focus on graduate employment, stating that the rise in child poverty and cuts to early services will undermine social mobility, and that it is unfair of the Government to remove such services and then blame the HE sector for not churning out upwardly mobile graduates.

Admissions

The OfS have updated their series of reports on unconditional offer making.

As we know, they, and the government, believe that unconditional offers are unconditionally bad, except where they are used to admit students based on a portfolio or similar. That much is very clear after the chaos of the moratorium/proposed retrospective ban/final new licence condition that we have worked through over the last few months. You can read more about the licence condition in our update from 9th July in case you missed it.

Their press release makes it fairly clear:

- The Office for Students (OfS) has today reiterated that unconditional offers risk pushing students into decisions that are not in their best interests, as updated analysis shows that young people who accepted unconditional offers before sitting their A-level exams are less likely to continue into their second year of study.

- The analysis finds that, even after controlling for a range of characteristics associated with dropout rates, A-level entrants who accepted an unconditional offer in 2017-18 had a continuation rate between 0.4 and 1.1 percentage points lower than would have been expected had they taken up a conditional offer instead. This translates to between 70 and 175 of the 15,725 A-level entrants placed through unconditional offers that year.

- UCAS analysis published in December 2019 found that applicants holding an unconditional offer in the 2019 cycle were, on average, 11.5 percentage points more likely to miss their predicted A-level grades by three or more grades. Today’s analysis by the OfS suggests that this lower A-level attainment then results in higher dropout rates in higher education.

- The OfS has also previously highlighted that ‘conditional unconditional’ offers – now banned until September 2021 because of concerns around unfair admissions practices during the coronavirus (COVID-19) pandemic – distort student choice and could be seen as akin to ‘pressure selling’.

- Nicola Dandridge, chief executive of the OfS, said today:

- ‘It is becoming increasingly clear that unconditional offers can have a negative impact on students. Unconditional offers can lead to students under-achieving compared to their predicted A-level grades, choosing a university and course that may be sub-optimal for them, and ultimately being at increased risk of dropping out entirely.

- ‘Dropout rates are overall low in England, so this is a small effect. But we remain concerned that unconditional offers – particularly those with conditions attached – can pressure students into making decisions that may not be in their best interests, and reduce their choices. It is particularly important that we allow students the space to make informed decisions at this time of increased uncertainty, which is why we have temporarily banned ‘conditional unconditional’ offers during the pandemic.

- ‘It is in everyone’s interests for students to achieve their full potential at school, enrol on a higher education course that best fits their needs, interests and aspirations, and succeed on that course.’

Their analysis says (excerpts):

- The number of unconditional offers being made has continued to grow. In 2019, four in 10 applicants had at least one offer with an unconditional component and over a quarter received at least one ‘conditional unconditional’ offer. Meanwhile, the proportion of students receiving at least one ‘direct unconditional’ offer rose modestly (0.5 percentage points since 2018) and the proportion receiving ‘other unconditional’ offers fell slightly (0.2 percentage points since 2018).

- As previously reported, there is little evidence that applicants placed through an unconditional offer are either more or less likely to enrol the following autumn. Regardless of whether they hold an unconditional offer, around three per cent of applicants placed through UCAS are not identified as starting higher education in the same year, or at the intended higher education provider. Applicants that have come through ‘other UCAS routes’ are roughly equally likely to be identified as starting higher education as those placed through conditional or unconditional offers.

- For entrants in 2015-16, 2016-17, and now 2017-18, a lower proportion (more than 1 percentage point) of those entering with unconditional offers continued with their studies after the first year, compared with those who enter with conditional offers.

- By contrast, the model estimates that 2017-18 BTEC entrants with unconditional offers were between 0.3 and 2.6 percentage points more likely to continue with their studies, relative to being placed through a conditional offer. The model estimates that between 15 and 135 additional BTEC entrants in that year continued with their studies having been placed through an unconditional offer, instead of a conditional offer. This is out of 5,115 BTEC entrants placed through unconditional offers in our 2017-18 modelling population.

- In addition, when looking at the different types of unconditional offer, A-level entrants in 2017-18 who were placed through ‘conditional unconditional’ or ‘direct unconditional’ offers are shown to be less likely to continue with their studies, relative to those placed through a conditional offer, after controlling for the same factors as above. ‘Direct unconditional’ offers are found to have the largest negative relationship with continuation rates (between -0.9 and -2.4 percentage points), compared to ‘conditional unconditional’ offers (between -0.1 and -1.0 percentage points). We find no statistically significant difference between the continuation rates of A-level entrants placed through ‘other unconditional’ offers and those placed through conditional offers.

- In our modelling of continuation rates, we control for predicted entry qualifications, instead of achieved qualifications, so that the model estimates include the impact of unconditional offers on Level 3 attainment reported by UCAS. Annex D contains results from an alternative model which controls for achieved entry qualifications instead (see Model II). This model finds no statistically significant association between unconditional offers and continuation for A-level entrants in all entrant years available. This would be consistent with what would be found if poorer performance at A-level, relative to predicted grades for those placed through unconditional offers, were driving the lower continuation rates of these entrants.
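The OfS turns percentage-point differences into headcounts by applying them to the relevant entrant population. The short Python sketch below reproduces that arithmetic for the two ranges quoted above; the function name `pp_to_students` is our own, for illustration only. Multiplying the rounded endpoints gives roughly 63 to 173 A-level entrants and 15 to 133 BTEC entrants, slightly different from the published 70 to 175 and 15 to 135, presumably because the OfS works from unrounded model estimates.

```python
from typing import Tuple


def pp_to_students(entrants: int, pp_low: float, pp_high: float) -> Tuple[float, float]:
    """Convert a percentage-point range into an approximate range of students.

    A difference of p percentage points across N entrants corresponds to
    roughly N * p / 100 students.
    """
    return entrants * pp_low / 100, entrants * pp_high / 100


if __name__ == "__main__":
    # Ranges as quoted (rounded) in the OfS analysis for 2017-18 entrants
    a_level = pp_to_students(15_725, 0.4, 1.1)  # fewer A-level entrants continuing
    btec = pp_to_students(5_115, 0.3, 2.6)      # additional BTEC entrants continuing
    print(f"A-level: about {a_level[0]:.0f} to {a_level[1]:.0f} students")
    print(f"BTEC: about {btec[0]:.0f} to {btec[1]:.0f} students")
```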

NSS

The National Student Survey outcomes were published (interactive charts are here). Last week we highlighted the OfS analysis, which suggested that C-19 didn’t have a real impact on the responses given to the survey. This week the OfS have published summaries of the data at national level (the charts can be viewed here).

As reported last week, on the negative side students continued to report comparatively lower rates of satisfaction with how their courses are organised and how effectively changes are communicated by their university or college.

Research Professional report on the NSS. Wonkhe have a blog examining which improvements to teaching practice may lead to more positive NSS outcomes; the blog is keen on consistency.

Student Finance

The APPG for students has published Reforming Student Finance: Perspectives from Student Representatives.

Student finance and cost of living

- Students generally felt that current levels of maintenance support are inadequate.

- Support should be increased and non-repayable means-tested maintenance grants should be reintroduced

- The system should better recognise the diversity of students and their particular needs

- Tailored support should be offered to certain students through better means testing

- Accommodation was identified as the main cost, with prices having increased significantly over the last decade – in many cases exceeding the maintenance loan available

- The relative reduction in maintenance funding was a concern as household income thresholds had not risen with inflation

- Other issues raised included travel costs, childcare costs, and the fact that the current London weighting does not address the different costs of living across the UK

- Consideration could be given to moving to monthly payments, instead of the current termly instalments

Effects

- Many students who lack savings or family money to plug the gap respond to the shortfall by taking on significant amounts of part-time work and taking out commercial loans, with serious effects on their wellbeing and mental health.

- Attainment can also suffer, both from financial stress and because taking on too much part-time work reduces students’ ability to study.

- The increase in drop-out rates over the last five years must be seen as a consequence of the above.

- Student representatives were clear that the current funding system reproduces existing social injustices, as these issues predominantly affect those from poorer or otherwise disadvantaged backgrounds.

Information, advice and guidance

- Student representatives generally felt that there was not adequate information, either nationally or through institutions, about various costs of being a student. The ‘hidden costs’ of studying were a particular concern, e.g. textbooks and graduation – students felt they had not been made aware of these enough. Furthermore, the lack of information around funding, particularly for postgraduate students, was a real issue.

- Student representatives were also angry about the way that UCAS advertised commercial loans.

Differences between funding agencies across the UK

- The timing of students receiving payments was raised as a major issue of difference

- The four funding agencies also differ in their treatment of estranged students

- Each agency requires and allows a different level of input from staff

Other issues identified

- Students felt there needs to be better support for distance learning.

- Changes were proposed to ‘lifelong learning’ to allow students to undertake further study later in life.

- Nursing, midwifery and allied healthcare students – a shortage of funding for these courses was identified; students on these courses were much less able to take on part-time work alongside their studies to support themselves.

- The lack of support for childcare was a key concern, as well as a lack of information or support for placements

Loans – Quick repayment facility

Meanwhile, Martin Lewis has attacked the Student Loans Company’s new website. He isn’t a fan, calling the new repayment tool irresponsible and dangerous and stating that it gives UK graduates a damaging and demoralising picture of their debts by exaggerating their outstanding loans. He is campaigning against the quick repayment facility on the website and is trying to remind the public that they are only required to repay 9% of their earnings above the threshold. He stated that, unless huge overpayments are made well above the 9%, overpaying makes very little difference to the overall debt and simply reduces the individual’s income (flushing money away unnecessarily). The BBC report that Lewis intends to write to Michelle Donelan to request that the quick repayment facility be removed immediately. Given that the facility may increase the money returning to the public coffers, and given Donelan’s track record of toeing the party line, his campaign may fall on deaf ears, or result only in a reminder next to the facility on the website that graduates don’t have to make overpayments.

Research Professional cover the argument, including the SLC’s response to Lewis.
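The repayment rule Lewis refers to can be sketched in a few lines of Python. This is a simplified illustration, not the SLC’s own calculation: it works on annual income, ignores interest and the final year in which a balance is cleared, and the threshold value (£26,575, which we understand to be the Plan 2 threshold for 2020-21) is an assumption to check against current SLC guidance. The name `annual_repayment` is hypothetical.

```python
# Illustrative threshold (assumption): Plan 2 figure for 2020-21; check current SLC guidance
PLAN_2_THRESHOLD = 26_575.0
REPAYMENT_RATE = 0.09  # 9% of earnings above the threshold


def annual_repayment(annual_income: float, threshold: float = PLAN_2_THRESHOLD,
                     rate: float = REPAYMENT_RATE) -> float:
    """Required yearly repayment on an income-contingent student loan.

    Nothing is due on earnings at or below the threshold. The outstanding
    balance is not an input: until the loan is cleared or written off, the
    required repayment depends only on income.
    """
    return rate * max(0.0, annual_income - threshold)


if __name__ == "__main__":
    for income in (20_000, 30_000, 45_000):
        yearly = annual_repayment(income)
        print(f"£{income:,}: £{yearly:,.2f} a year (about £{yearly / 12:,.2f} a month)")
```

At £30,000 a year, for example, the sketch gives 9% of £3,425, or £308.25 a year. Because the outstanding balance never enters the calculation, a larger displayed balance does not change what a graduate is required to repay, which underpins Lewis’s point.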

Opportunity areas expansion

Donelan announced the expansion of the Opportunity Areas programme (an additional £18 million), which will twin the 12 Opportunity Areas with places facing similar challenges to help unleash the potential of young people in other parts of the country. This is the fourth year of funding for the Opportunity Areas programme, and this year will focus on tackling the problems exacerbated by C-19. Catch-up schooling, teacher recruitment and training, and early speech and language development are priorities to help narrow the attainment gap within the Opportunity Areas. The article details some of the approaches currently in place to help these areas ‘level up’, including improving the quality of careers advice, work experience, digital and other skills for employment, holiday clubs, support for excluded pupils, and opportunities to develop confidence, leadership and resilience.

Devolved news: Wales

Welsh universities and colleges will benefit from a newly announced £50m support package to help them cope with the impact of the coronavirus crisis (£27m for HE; £23m for FE). The support is part of the Welsh Government’s action to support students and Wales’ major education institutions, and to provide the skills and learning needed in response to the economic impact of the coronavirus.

The HE Recovery Fund will support universities to maintain jobs in teaching, research and student services, invest in projects to support the wider economic recovery, and support students suffering from financial hardship.

The FE funds will increase teaching support following students’ time away from education due to C-19 virus and help new students with their transition to post-16 learning. Up to £5 million will be provided to support vocational learners to return to college to help them achieve their licence-to-practice qualifications, without needing to re-sit the full year.

An extra £3.2 million will be used to provide digital equipment such as laptops for FE students. £100,000 will also be provided to support regional mental health and wellbeing projects and professional development in Local Authority Community Learning.

Civic engagement rankings

King’s College London, the University of Chicago and the University of Melbourne have published Advancing University Engagement: University engagement and global league tables, stating that universities need to better demonstrate their value to society. Universities around the world are making a positive impact through engagement but, unlike teaching and research, this is rarely recognised or celebrated. The report calls for societal impact to be recognised in university rankings and proposes a new framework to measure and rank this impact, or ‘engagement’, so that it can be incorporated into global university league tables. The authors suggest this would encourage universities to ensure more of their activities benefit local communities and wider society, while better showcasing the benefits they already produce.

Richard Brabner, Director of the UPP Foundation (which established the Civic University Commission), said:

- … it would be much better if existing and new rankings included the value universities bring to society so that they provide a more comprehensive picture of our sector. The report provides an important contribution to this debate and includes a sensible range of indicators. I particularly welcome the report’s focus on embedding civic engagement within the curriculum. This is vital to fostering a student’s long-term commitment to service, as well as developing the skills and attributes to thrive in the outside world.

- I would however caution any perception that a global ranking can define whether a university is civic or not. A truly civic university focuses on the needs of its community and region. It is important these local factors drive the activities of a civic university, not the benchmarks or indicators in a ranking.

Public Attitudes to Science

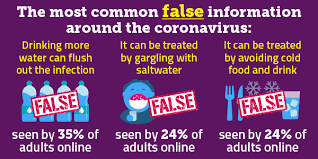

BEIS published the 2019 edition of Public attitudes to science, which looks at the UK public’s attitudes to science, scientists and science policy. The survey shows that the public sees scientists and engineers as among the most trustworthy professionals, that the public is supportive of spending on ‘blue skies’ research, and that UK adults are becoming more familiar with, and more adaptable to, an ever-accelerating pace of technological change. It also looks in depth at attitudes towards an ageing society, AI and robotics (including their use in healthcare), genome editing for food security, and micro pollution and plastics. It finds that the public continues to have an appetite for information about science and mainly uses television, online news platforms and Facebook to stay informed. However, people are unsure whether they can trust media reporting of science issues, with online sources particularly mistrusted.

- Of the range of scientific applications asked about, vaccination and renewable energy attracted near universal public support, while driverless cars, GM crops, nuclear power, and the use of animals in research were the most contentious. However, on balance, more people felt that the benefits outweighed risks in almost all technology areas asked about. The one clear exception was driverless cars, where more people felt the risks outweighed the benefits: only 23% agreed that driverless car technology will be safer than human drivers. It is notable that support for vaccination remained very strong (only 4% felt that the risks outweigh the benefits) despite prevailing media attention around the ‘anti-vaccination’ movement.

On the ageing society:

- The large majority (84%) would prefer to live only for as long as they can ensure good quality of life

- Two thirds (64%) of people had heard about life extension technologies to slow the ageing process, and 31% would choose this if available. However, people were more negative (62%) than positive (31%) about the impact this would have on society, with people concerned about burden on the NHS and increased taxes for working people.

- When asked about different enhancement technologies to assist older people in later life, the public was largely in favour of cognitive enhancing drugs (80%) and to a lesser extent robotic clothing to improve mobility (59%). However, there was much less support for more medically invasive technologies such as brain chip implants to improve intelligence and cognition (24%).

- 54% had heard about the idea of robot caregivers to help older people and around half (45%) said that they would use one, either for themselves in later life or for a relative. People felt more comfortable with the idea of a robot helping with household tasks (61%) or healthcare (57%) than providing companionship (29%). Eight in ten (80%) thought that robot caregivers would lead to older people having less human contact.

On AI and robotics:

- Nine in ten people (90%) had heard about the idea that AI and robots could begin to take over many human jobs, beyond the more routine jobs. Most working people recognised that aspects of their job could be automated in the future: 51% thought that their job could be at least partially automated within the next 5 years, rising to 69% within a 20-year timeframe. Overall, 49% considered this to be a ‘good thing’ for society and 45% ‘a bad thing’

- A large majority felt comfortable about AI and robots being used to support a human doctor to make a diagnosis or recommend treatment (80%), or in surgery (71%). The opposite was found in relation to technology replacing human doctors, where a clear majority felt uncomfortable in both contexts (respectively 81% and 75% felt uncomfortable about technology replacing doctors in diagnosis and surgery). While 58% believed AI and robots used in healthcare will accelerate progress in medicine, only 37% thought that it could surpass the accuracy of human doctors. The chief concern was related to loss of contact.

- For the purposes of developing healthcare-related AI, the large majority were willing to share their personal health data with the NHS (90%). People were somewhat less willing to share their data with research organisations (73%) and the government (61%), and much less willing to share their data with private companies (35%). This echoed earlier findings that the public is uncomfortable with the role of the private sector in scientific development.

- There was widespread disapproval of the use of data to target online adverts and for political campaigning

Research

EU Budgets – The EU R&D budgets have come back well below the level expected. Research Professional report:

- Heads of EU member states agreed their stance on a 2021-27 budget of €1,074bn (£970bn) for the bloc in the early hours of this morning, along with a €750bn Covid-19 recovery fund for 2021-23, after four days of tense negotiations.

- The budget would devote €75.9bn to the Horizon Europe R&D programme, topped up with €5bn from the fund. The Erasmus+ mobility programme would get €21.2bn, all from the budget. These totals are much less than the European Parliament and research and education institutions wanted, so all eyes are now on the Parliament to see whether it will veto the deal.

This RP article has more detail and the responses from the European research alliances and guilds.

UUK, GuildHE, the Russell Group and European bodies have signed this joint statement aiming to reach agreement by addressing the sticking points on UK participation in Horizon Europe. It addresses:

- Demonstrating UK commitment to the programme

- Ensuring a fair financial contribution through a ‘two-way’ correction mechanism

- Accepting EU oversight of the use of programme funds

- Agreeing to introduce reciprocal mobility arrangements to support the programme

- Clarifying that the results of research can be exploited beyond the EU

The statement argues that, with enough will on both sides, it should be possible to reach an agreement before the Horizon Europe programme is due to begin in January whilst acknowledging that time is rapidly running out.

UKRI – UK Research and Innovation (UKRI) published their (146-page) Annual Report and Accounts (2019-20). The report highlights as significant milestones and achievements its work supporting the research and innovation community during the COVID-19 pandemic, and the delivery of significant …

PhD to Academia – a challenging transition – HEPI published PhD students and their careers examining the professional ambitions of PhD students. Key findings:

- Most PhD students (88%) believe their doctorate will positively impact their career prospects.

- Roughly equal proportions of PhD students are more likely (33%) and less likely (32%) to pursue a research career now than before they started their PhD, with the majority choosing academic research (67%) or research within industry (64%) as a probable career path.

- PhD students feel well trained in analytical (83%), data (82%) and technical (71%) skills, along with presenting to specialist audiences (81%) and writing for peer-reviewed journals (64%).

- They are less confident of their training in managing people (26%), finding career satisfaction (26%), applying for funding (22%) and managing budgets (11%).

- When considering future careers, PhD students are more likely to attend career workshops (76%) and networking events (60%) or to do their own research (64%) than to discuss options with an institutional careers consultant (13%).

The report incorporates qualitative research that captures the voices of PhD students:

- ‘I don’t feel qualified or prepared to enter a career outside of research.’

- ‘The requirement to move around in pursuit of short term postdocs is terrible for social and family life’.

- ‘The academic culture will be detrimental to my mental health.’

Following the report’s publication, the media picked up on the difficulties noted above that early career researchers face in moving into an academic career.

Research parliamentary questions covered:

And an oral question:

Q – Daniel Zeichner (Lab) (Cambridge): What steps he is taking to secure the future of UK research and development.

A – Amanda Solloway (The Parliamentary Under-Secretary of State for Business, Energy and Industrial Strategy): The Government are now implementing their ambitious R&D roadmap, published earlier this month, reaffirming our commitment to increasing public R&D spending to £22 billion by 2024-25 and ensuring the UK is the best place for scientists, researchers and entrepreneurs to live and work.

Q – Daniel Zeichner: I appreciate the recent announcements, but can the Minister reassure us that all universities will be able to access those loans, with freedom to invest in line with local priorities? Will she take a look at the proposals from the new Whittle laboratory in Cambridge, which needs to match the already secure £23.5 million in private sector funding to develop the first long-haul zero-carbon passenger aircraft?

A – Amanda Solloway: I give my assurance that one of the things we are addressing in the roadmap is ensuring that we become a science superpower. Within that, we are levelling up across the whole of the country. I am committed to making the workplace diverse and ensuring that we have a culture that embraces that throughout the whole of country. We will ensure that UK scientists are appreciated and rewarded.

And another oral question:

Mr Sheerman:…The Minister has a business background, so does she not realise that if she could persuade the Chancellor of the Exchequer to follow Mrs Thatcher’s example and introduce a windfall profit tax on people who have made a lot of money—the gambling industry and companies such as Amazon—that could be ploughed into research and development? Universities will go through a tough time in the coming months and years, so let us put real resources into research and development as never before.

Amanda Solloway:…The hon. Gentleman will be aware that we have a taskforce that has been looking into how to support universities. It has enabled us to set up a stability fund, which will enable R&D to continue in our institutions. In addition, in the roadmap, which contains the place strategy, we are talking about lots of levelling up. We are making sure we have the opportunity to take this forward and become the science superpower that we all want to be.

Disability

Advance HE have published Three months to make a difference, a booklet detailing seven areas that are challenging for disabled students, alongside recommendations for resolving them. It draws on issues raised in a series of roundtables run by the Disabled Students’ Commission (supported by the OfS). It highlights that rapid action is needed to ameliorate the additional challenges C-19 creates for disabled students.

- Provide disabled applicants with support and guidance that is reflective of the COVID-19 pandemic in the clearing process

- Ensure ease of access to funding for individual level reasonable adjustment

- Ensure student support meets and considers the requirements of disabled students during the pandemic

- Consider disabled students when making university campuses and accommodation COVID-19 secure

- Facilitate disabled students’ participation in welcome and induction weeks and ongoing social activities

- Ensure blended learning is delivered inclusively and its benefits are considered in long-term planning

- Embed accessibility as standard across all learning platforms and technologies

Parliamentary question: Disabled students’ allowances

- Q (Mr Barry Sheerman) (Huddersfield): To ask the Secretary of State for Education, what assessment he has made of the effect of his Department’s announcement of 6 July 2020 on (a) changes to the Disabled Students’ Allowance and (b) the introduction of a maximum allowance of £25,000 applying to both full-time and part-time undergraduate and postgraduate recipients of that allowance on those students with the highest needs.

- A: Michelle Donelan: Regulations will be laid in Parliament to effect this policy change along with the other elements of the student finance package for the 2021-22 academic year. An equality analysis will be published alongside that. The date that these regulations will be laid is yet to be confirmed.

- Donelan also confirmed that for the vast majority of students receiving DSA funding greater than £25,000, this was driven by funding for the DSA travel grant, which will remain uncapped.

Degree Classifications

UUK have published a press release stating that universities from across the UK have agreed new principles to tackle grade inflation, reconfirming the sector’s strong collective commitment to protecting the value of qualifications. There are six principles, along with recommendations, to guide institutions when deciding the final degree classifications awarded to students. The principles will be added to the UKSCQA statement of intent, which outlines specific commitments universities have made to ensure transparency, fairness and reliability in the way they award degrees.

The press release explains:

Alongside six new principles which cover the importance of providing clear learning outcomes, regular reviews, student engagement and transparency in algorithm design, the report includes examples of recommended good practice in the following areas:

- Discounting marks – academic experimentation and risk taking by students are important elements to course design and learning, but there should not be the option of discounting core or final year modules. Clear instructions on how discounting applies to the final award and progression through their degree must be provided to students.

- Border-line classifications – there should be a maximum zone of consideration of two percentage points from the grade boundary. Rounding, if used, should occur only once, and at the final stage.

- Only one algorithm should be used to determine degree classifications and this should be clearly stated to students at the beginning of their studies.

- Weighting given to different years within degrees – divergence from the four outlined models (p6 of the principles) should be limited.
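As an illustration of the borderline and rounding principles above, here is a minimal Python sketch. Only the two-point maximum zone of consideration and the round-once-at-the-final-stage rule come from the principles; the boundaries (70/60/50/40), rounding to whole marks, and the names `classify` and `round_half_up` are our own assumptions for illustration.

```python
from decimal import Decimal, ROUND_HALF_UP
from typing import Tuple

# Hypothetical: typical UK honours boundaries, highest first (not taken from the UUK report)
BOUNDARIES = [(70.0, "First"), (60.0, "2:1"), (50.0, "2:2"), (40.0, "Third")]
MAX_ZONE = 2.0  # maximum zone of consideration, in percentage points below a boundary


def round_half_up(value: float, places: int = 0) -> float:
    """Round halves upwards (68.5 -> 69), unlike Python's round(), which rounds halves to even."""
    step = Decimal(10) ** -places
    return float(Decimal(str(value)).quantize(step, rounding=ROUND_HALF_UP))


def classify(final_average: float, places: int = 0) -> Tuple[str, bool]:
    """Return (classification, borderline) for a final weighted average.

    Rounding happens once, here, on the final average only; module marks are
    assumed to have been combined unrounded.
    """
    mark = round_half_up(final_average, places)
    label = "Fail"
    for boundary, name in BOUNDARIES:
        if mark >= boundary:
            label = name
            break
    # Borderline: within MAX_ZONE points below a boundary the mark did not reach
    borderline = any(0 < boundary - mark <= MAX_ZONE for boundary, _ in BOUNDARIES)
    return label, borderline


if __name__ == "__main__":
    for avg in (68.4, 68.5, 69.5, 65.0, 39.0):
        print(avg, classify(avg))
```

The borderline flag only identifies students for consideration; how a case within the zone is then decided remains a matter for each institution’s own regulations.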

Research Professional write about the announcement and comment:

- …universities today find themselves playing catch-up with the government’s perceptions of higher education through a new commitment to tackling grade inflation…

- The pledge is suitably vague so as not to impinge on institutional autonomy, covering four ill-defined areas: ensuring assessments continue to stretch and challenge students; reviewing and explaining how final degree classifications are calculated; supporting and strengthening the system of external examiners; and reviewing and publishing data and analysis on students’ degree outcomes.

- Greater clarity over the algorithms used to calculate degree classifications is in the frame, while there are plans to limit borderline zones around grade boundaries and to stop discounting low grades in final-year work. There will also be periodic reviews of how the system works.

- Whether this statement of intent will make much practical difference to degree awards in the long run remains to be seen. However, it does show that universities are increasingly preoccupied with responding to the bêtes noires of ministers.

Nursing

The Royal College of Nursing has published Beyond the Bursary: Workforce Supply. It states that to get more people onto the nursing degree and successfully graduating in England, the Government must provide appropriate support both on entry and throughout the degree. The report details the RCN’s modelling, undertaken by London Economics, which demonstrates the level of funding required to increase the number of applicants to the nursing degree. It calls on the Government to immediately:

- reimburse tuition fees or forgive current debt for all nursing, midwifery, and allied health care students impacted by the removal of the bursary

- abolish self-funded tuition fees for all nursing, midwifery, and allied health care students starting in 2020/21 and beyond

- introduce universal, living maintenance grants that reflect actual student need.

Parliamentary question: 2020 nursing applications so far

PQs

- No detriment approach to assessment.

- Care leaver outcomes

- On the adequacy of predicted grades, Donelan stated that disadvantaged groups were more likely to be OVERpredicted: Black applicants were proportionally 19% more likely to be overpredicted than White applicants, and disadvantaged applicants (measured using POLAR) were 5% more likely to be overpredicted than the most advantaged applicants.

Inquiries and Consultations

Click here to view the updated inquiries and consultation tracker. Email us on policy@bournemouth.ac.uk if you’d like to contribute to any of the current consultations.

Recess

Parliament is winding down. The House of Commons enters recess at the end of this week, with the Lords following shortly after. Although MPs will return to their constituencies and take their summer holidays, ministerial business will continue to tick over, with possible HE announcements during this traditional down period. We’ll continue to monitor the latest developments and keep you informed periodically through the policy update. We anticipate the policy update will be shorter and we won’t send it every week throughout the summer; we have our own recess too, after a very busy few months.

Other news

Levelling up: Guardian article on creating the MIT of the North.

Admissions: iNews has an article based on comment from a former Ofqual board member, who states that universities should check with schools what teachers originally predicted because a ‘significant minority’ of students are likely to end up with the ‘wrong’ final grades.

Boys’ ambition: The Guardian has an article based on research by UCL suggesting boys are more ambitious than girls and more likely to apply to higher-tariff institutions. The researchers suggest this could be a factor in explaining the gender pay gap. The research also suggests that having firm or ambitious university plans is linked to higher GCSE grades (compared with pupils with looser intentions).

- Nikki Shure, one of the co-authors of the research, said the data found boys to be more ambitious than girls regardless of family income. That ambition often translated into attending higher-status universities and more lucrative careers, which may help explain the gender pay gap.

- “The goal of this paper is not just to tell girls to be more ambitious, but to get young people to think about making concrete, specific university plans. Of course it is a good idea to foster high-attaining girls to be ambitious and provide them with the information and support to achieve these goals,” Shure said.

- “It is interesting that a boy and a girl at the same school with the same prior attainment and same family background have substantially different university plans,” she said.

- “Making more ambitious plans could help narrow the gap, but of course it is not going to eliminate the gender pay gap, given issues around different returns to different courses even at the same institution.”

- The research team found a similar pattern among pupils who were immigrants or the children of immigrants, who were more likely to aim for selective universities than their classmates. “First- and second-generation immigrants are hence much more academically ambitious than their peers of British heritage, even when they are otherwise from a similar background, of similar academic ability, and attend the same school,” the paper states.

- Shure said: “One of the main things that we want to highlight is that making a concrete goal matters for academic performance. The young people in this data set had to type out the name of the top three universities to which they plan to apply, which requires some agency and thought.

- “We are definitely not saying that everyone needs to plan to apply to a Russell Group university, but rather make a concrete plan to which they can work.”

Brace yourself: Diana Beech (formerly of HEPI and a former policy adviser to the Universities Minister) writes for Research Professional’s Sunday Reading, reflecting on the last year of HE changes in Parliament and anticipating what is still to come. Here are a couple of excerpts looking forward:

- Questions nevertheless remain on what the government will do about newer institutions, which might not have the resources and reserves to weather the oncoming storm but that remain anchor institutions in their local communities—creating jobs, attracting investment and producing hugely employable and socially valuable, but not necessarily high-earning, graduates. Depending on location, letting institutions like these fail may not tally with pledges to ‘level up’ the regions. So there are sure to be major turf wars ahead if the government is intent on convincing the electorate that levelling up, in this instance, means siphoning some places off and levelling their opportunities down.

- Other battlefields to watch out for could include…measures to engineer student choice towards subjects leading directly to a particular job—all of which we could see addressed in the long-awaited response to the Augar review due in the autumn.

- The past 12 months have been turbulent for universities, and the political roller-coaster shows no signs of slowing yet. Higher education would do well to brace itself for further challenges and changes ahead.

And that’s what we think too Diana!

Subscribe!

To subscribe to the weekly policy update simply email policy@bournemouth.ac.uk.

JANE FORSTER | SARAH CARTER

Policy Advisor | Policy & Public Affairs Officer

Follow: @PolicyBU on Twitter | policy@bournemouth.ac.uk
