Welcome to the first update of 2024, which brings you up to date with what happened before the holidays.

We’ve provided pop out documents so those with a keen interest in each topic can read more detailed summaries.

This edition has the latest on the Renters (Reform) Bill and the delay of REF until 2029, summarises the Government’s response and commitments following the Nurse Review of the research landscape, and marks the UK’s official association with Horizon Europe. We’ve also gone in depth on international students, bringing you the hottest debate from the Parliamentary Chambers over the last few weeks.

I’ll be experimenting with some new approaches this year to make sure that the update is useful and relevant to as many people as possible: any feedback is gratefully received.

Quick parliamentary news

Schools and post 16 education: The Education Committee questioned the SoS for Education, Gillian Keegan, on the Advanced British Standard (ABS). Keegan stated that the ABS was being introduced to allow for more time, greater breadth, and better parity of esteem between technical and academic qualifications. The consultation on the new qualification is expected to be released soon.

Marking boycott: Keegan also stated that the marking and assessment boycott was outrageous and damaging to the brand image of the sector. She stated that the consultation on minimum service levels would help consider whether it would be helpful to equip universities with an additional tool to alleviate the impact of disruption. We introduce you to this consultation here.

Education oral questions: Minister Keegan also responded to education oral questions in the Chamber on Monday.

Healthcare students: A Westminster Hall debate on pay and financial support for healthcare students was held following three petitions on the topic. We have a short summary of the debate provided by UUK here. Prior to the debate the House of Commons Library provided a useful briefing on the matter (full briefing here, useful short summary here).

HE challenges: Minister Halfon spoke at the THE conference to set out his 5 ‘giants’ – the 5 challenges he believes HE faces in this decade and beyond: HE reforms, HE disruptors, degree apprenticeships, the lifelong learning entitlement, and artificial intelligence and the fourth industrial revolution. The speech is worth a quick read.

DSIT campus: DSIT is moving many of its roles to a base in Manchester. It’s part of the government’s Places for Growth programme, a civil service wide commitment to grow the number of roles outside of London and the south-east to 22,000 by 2027. Details here.

REF 2029

REF has been delayed from 2028 to 2029 to allow for additional time to implement the big changes the 2029 REF exercise will entail. Research England state the delay is in recognition of the complexities for HEIs in:

- the preparation for using HESA data to determine REF volume measures

- fully breaking the link between individual staff and institutional submissions, and

- reworking of institutional Codes of Practice

The REF Team says it is working through dependencies in relation to this change, including the ongoing work on people, culture and environment, and will provide an updated timeline as soon as possible.

More information on the detail behind the changes here. Research Professional has a write up here and here. Wonkhe coverage here.

Research: Nurse Review – Government response

The Government published Evolution of the Research, Development and Innovation Organisational Landscape, its response to the Nurse Review of the Research, Development and Innovation Organisational Landscape, which began in 2021 and published its outcomes in March 2023.

The Government has committed to a wide range of actions and approaches, including:

- Developing a comprehensive map of the UK’s clusters of RDI excellence, to be published in the coming months.

- Boosting support for universities in areas with lower levels of R&D investment through the Regional Innovation Fund, which provides £60 million funding across the UK in 2023/24.

- Publishing a breakdown of DSIT’s R&D budget over the financial years 2023/24 to 2024/25.

- Investing £20 billion into R&D per annum by 2024/25 (this isn’t all new money!)

The Government state they will pilot innovative organisational models, embed data, evidence and foresight into their approach, maximise the impact of public sector RDI organisations and expand philanthropic funding into research organisations. The Government call on everyone within the sector to play their part, recognising the central role of DSIT as a single point of leadership and coordination.

The full 62-page detail is here, or you can read the key points in our pop out document.

Previous reports and letters relating to the Nurse Review are here. UKRI’s reaction to the Government’s response is here.

There’s also a parliamentary question on the Review and research funding:

Q – Chi Onwurah MP: [edited] with reference to the Government response to the Nurse Review what the (a) milestones, (b) deliverables and (c) timelines are for the review of the future of QR research funding.

A – Andrew Griffith MP: The Review of Research England’s (RE) approach to Strategic Institutional Research Funding (SIRF) which includes quality-related research (QR), will assess the effectiveness of unhypothecated research funding for Higher Education Providers. It will assess the principles and assumptions underlying current approaches and evaluate implementation. The review, set for 2024, will update the evidence on SIRF’s impact, enhance transparency, and engage the Higher Education sector. RE will commission an independent review on the ‘Impact of SIRF’ in December 2023 and stakeholder workshops in Summer 2024. Any changes to funding approaches will not be implemented before Academic Year 2026-2027.

Wonkhe delved into the government response in their usual pithy fashion, making short work of a glaring omission:

- It’s reckoned, on average, that the average research council grant covers around 70 per cent of the cost of performing research, rather than the 80 per cent it is supposed to. It was hoped that the government’s response to the Nurse review of the research landscape, published last week, would address this. It did not. Those hoping to see the full economic cost issue addressed saw it balanced against the overall project funding pot and the availability of other research funding, particularly QR allocations – with the implication being that a bump to one would result in losses to at least one of the others.

More analysis available in Wonkhe’s blog: DSIT published its response to the Nurse review of the research landscape, but there’s not much evidence of the unifying strategy Nurse asked for. James Coe breaks it down.

Research: Quick News

Horizon: On 4 December the UK formalised its association to the Horizon and Copernicus programmes. DSIT also announced their aim to maximise participation in Horizon with funding of up to £10,000 available to selected first time applicant UK researchers to pump prime participation, via a partnership with the British Academy and other backers. SoS Michelle Donelan stated: Being part of Horizon and Copernicus is a colossal win for the UK’s science, research and business communities, as well as for economic growth and job creation – all part of the long-term decisions the UK government is taking to secure a brighter future. UUK Chief Executive, Vivienne Stern MBE, said: This is a momentous day. I am beyond delighted that the UK and EU have finally signed the agreement confirming the UK’s association to Horizon.

There are several recent interesting parliamentary questions:

Research Funding: parliamentary question (edited) – Chi Onwurah MP – whether the £750 million of R&D spend is in addition to existing R&D funding (paragraph 4.49 of the Autumn Statement 2023).

Answer – Andrew Griffith MP: As a result of the UK’s bespoke deal on association to Horizon Europe and Copernicus, the government has been able to announce substantive investment in wider research and development (R&D) priorities. The £750 million package is fully funded from the government’s record 2021 Spending Review funding settlement for R&D. This includes £250 million for Discovery Fellowships, £145 million for new business innovation support and funding to support a new National Academy of mathematical sciences. These are transformative new programmes that maximise opportunities for UK researchers, businesses and innovators. We will also continue to deliver a multi-billion-pound package of support through the existing Horizon Europe Guarantee.

Regional inequalities: parliamentary question (edited) – Baroness Jones of Whitchurch: what steps the Government is taking to reduce regional inequalities in government-funded research and development.

Answer – Viscount Camrose: The Levelling Up White Paper (published in February 2022) committed to a R&D Levelling Up Mission, recognising the uneven distribution of gross R&D (GERD) spending across the UK. DSIT is delivering this mission to increase public R&D investment outside the Greater South-East by at least 40% by 2030, and at least one-third over this spending review period. We are making progress through investing £100 million for 3 Innovation Accelerators (Greater Manchester, West Midlands and Glasgow) for example, and investing £75 million for 10 Innovate UK Launchpads, £312 million for 12 Strength in Places Fund projects and £60 million for the Regional Innovation Fund.

Research Bureaucracy Review: Parliamentary question (edited) – Baroness Jones of Whitchurch: when the Government intend to implement the final report of the Independent Review of Research Bureaucracy published in July 2022.

Answer – Viscount Camrose: The Government is committed to addressing the issues set out in the Independent Review of Research Bureaucracy. We are working with other government departments, funders and sector representative bodies to finalise a comprehensive response to the Review and will publish it in due course. In the meantime, government departments and funding bodies have begun implementing several of the Review’s recommendations. We have established a Review Implementation Network, bringing together senior representatives from across the research funding system, to deliver the recommendations of the review and maintain momentum on this issue.

Independent Research Funding: DSIT announced an application round for the £25m Research and Innovation Organisation Infrastructure Fund. The fund will provide grants to research & innovation organisations to improve their national capabilities and is open to independent research and innovation bodies in the UK for funding for new small and medium scale research equipment, small and medium scale equipment upgrades, or small and medium scale facility upgrades. DSIT aim for the fund to address market failures in the funding landscape identified by the Landscape and Capability Reviews, therefore improving the R&I infrastructures available to RIOs, improving the quality of the national capabilities they provide and enabling them to better serve their users and the UK.

Spin outs: The Government published the independent review of university spin-out companies. The review recommended innovation-friendly policies that universities and investors should adopt to make the UK the best place in the world to start a spin-out company. To capitalise on this the government intends to accept all the review’s recommendations and set out how it will deliver them. You can also read the UKRI response here. We have a quicker read summary of the review here.

EDI: Remember the furore over the SoS intervention when Michelle Donelan ousted a member of UKRI’s EDI group for inappropriate social media posts/views? A recent parliamentary question on the matter tries to get behind the investigation to find out how it commenced.

Q – Cat Smith (Labour): To ask the Secretary of State for Science, Innovation and Technology, who authorised the reported gathering of information on (a) the political views and (b) related social media posts of members of the UKRI EDI board; and how much money from the public purse was expended in the process of gathering that information.

Answer – Andrew Griffith:

- After concerns were raised about the social media activity of a member of a public body advisory panel, the Secretary of State requested information on whether other members of the group were posting in a manner that might come into conflict with the Nolan Principles. Minimal time was taken by special advisers to gather information already in the public domain.

- Information is not gathered by special advisers on the views or social media of staff working in higher and further education, except in exceptional circumstances, such as this, where it supports the Secretary of State to reach an informed view on a serious matter.

Life Sciences: We introduced the autumn statement in the last policy update. However, we’re drawing your attention to the content announcing the £960 million for clean energy manufacturing and £520 million for life sciences manufacturing aiming to build resilience for future health emergencies.

Quantum: DSIT published the National Quantum Strategy Missions. The missions set out that:

- By 2035, there will be accessible, UK-based quantum computers capable of running 1 trillion operations and supporting applications that provide benefits well in excess of classical supercomputers across key sectors of the economy.

- By 2035, the UK will have deployed the world’s most advanced quantum network at scale, pioneering the future quantum internet.

- By 2030, every NHS Trust will benefit from quantum sensing-enabled solutions, helping those with chronic illness live healthier, longer lives through early diagnosis and treatment.

- By 2030, quantum navigation systems, including clocks, will be deployed on aircraft, providing next-generation accuracy for resilience that is independent of satellite signals.

- By 2030, mobile, networked quantum sensors will have unlocked new situational awareness capabilities, exploited across critical infrastructure in the transport, telecoms, energy, and defence sectors.

Research concerns: Research Professional published the findings of two of their own surveys, covering concerns over pressure to publish, predatory journals and culture issues. More here.

Regulatory

You’ll recall earlier this year the Industry and Regulators Committee delivered criticism and called for improvements to be made by the OfS in the way it engages with and regulates the HE sector. Recently the OfS wrote to the Committee to set out their response. The OfS confirmed their commitment to act on the Committee’s findings and set out these actions:

Engagement with students

- Expanding our existing plans for a review of our approach to student engagement, to consider more broadly the nature of students’ experiences in higher education, and to identify where regulation can address the greatest risks to students.

- Reframing of the role of our student panel – designed to empower students to raise the issues that matter to them.

Relationship with the sector

- Robust, two-way dialogue is key to regulation that works effectively in the interests of students.

- We have significantly increased our engagement with institutions in response to feedback, and this will be an ongoing priority.

- The Committee’s report gives further impetus to that work with colleagues across the sector to reset these important relationships.

Financial Sustainability of the sector

- We agree that the sector is facing growing risks and we are retesting our approach to financial regulation in this context, including developing the sophistication of our approach to stress-testing the sector’s finances.

The content the OfS provides in its response document at pages 4-26 pads out the above headline statements with more detailed plans and context and touches on wider topics such as free speech, value for money, and the regulatory framework. Read it in full here.

Research Professional discuss the main elements here (in rather a more polite tone than you might usually expect from them). Meanwhile Wonkhe summarise recent IfG content: the OfS needs to assert its independence better – and the government must refrain from “frequent meddling” in the regulator’s work. These are among the conclusions of the Institute for Government think tank in its assessment of the government and OfS responses to the Lords Industry and Regulators Committee report. It suggests that OfS’ dual role as regulator and funder is creating confusion, and that this issue was not sufficiently explored in the committee’s inquiry.

Renters (Reform) Bill – Committee Stage

The Renters (Reform) Bill completed Committee Stage and is waiting for a date to be considered at Report Stage in the House of Commons. We have a pop out document for you listing the most relevant information on the Bill in relation to student rental accommodation.

Mental Health

Nous and the OfS published a report on student mental health: Working better together to support student mental health – Insights on joined-up working between higher education and healthcare professionals to support student mental health, based on a ten-month action learning set project.

Separately, NHS Digital published the wave 4 findings as a follow up to the 2017 Mental Health of Children and Young People (MHCYP) survey. Overall, rates of probable mental disorder among children and young people aged 8 to 25 years remained persistently high, at 1 in 5, compared to 1 in 9 prior to the pandemic.

PTES

Advance HE published the Postgraduate Taught Experience Survey results:

- 83% of students were satisfied overall with their experience, up 1% since 2022, and the highest since 2016 and 2014 when it also reached 83%.

- Satisfaction levels among non-EU overseas students have continued to increase and now exceed by a sizeable margin those of UK students across all measures of the postgraduate experience.

Consideration of leaving their course

- 18% of postgraduate taught students had considered leaving their course and, of those, the number who cited financial difficulties increased from 8% in 2022 to 11% in 2023.

- UK students were considerably more likely to consider leaving their course than overseas students (29% of UK students considering leaving in comparison to, for example, students from India, of whom only 6% had considered leaving).

- Women and non-binary students were more likely to consider leaving their course, as were those who studied mainly online.

- Students who had free school meals as children were more likely to consider leaving their course, particularly because of financial difficulties, and this differential continued even among students aged 36 and above.

PRES

Advance HE also published the postgraduate research experience survey.

- 80% of postgraduate researchers express overall satisfaction with their experience at their institution.

- Researchers working mostly or completely online were less satisfied than those who worked mostly or completely in-person.

- The largest gaps in satisfaction between ethnicities focused around the opportunities provided for development activity with Black students a lot less likely to have been offered (or taken up) teaching experience and other development opportunities.

- Among those considering leaving, cost of living is an increasingly important factor in how they view their challenges.

Jonathan Neves, Head of Business Intelligence and Surveys at Advance HE, said: It is positive to see nearly four out of five PGRs satisfied with their experience and there is encouraging feedback about research. But we should note that this is not for all groups. Institutions will also wish to explore why some – females and minority groups, in particular – are experiencing lower levels of satisfaction and at the same time to look at ways to address a gradual fall in satisfaction over time.

Student Loans

The Student Loans Company (SLC) published the latest figures covering student financial support for the academic year 2022/23 and the early in year figures for the academic year 2023/24, across England, Wales and Northern Ireland. In England:

- 3% decrease in higher education student support in academic year 2022/23, at £19.7 billion.

- Number of full-time Maintenance Loans paid remains relatively consistent to the previous year, at 1.15 million.

- In 2022/23, as the last Maintenance Grant-eligible students conclude their courses, the % of full-time maintenance support attributable to grants falls below 0.1%.

- Provisional figures indicate a potential 1.1% decrease in the number of Tuition Fees Loans paid on behalf of full-time students.

- Continued decrease in the number of Tuition Fee Loans paid on behalf of EU (outside UK) students, due to the change in policy in 2021/22.

- 1% decrease in the number of Tuition Fee Loans paid on behalf of part-time students.

- Tuition Fee Loan take-up for accelerated degrees continues to increase.

- 3% of all full-time loan borrowers took only a Tuition Fee Loan and opted out of Maintenance Loan support – consistent with the previous two years.

- 7% decrease in the number of Postgraduate Master’s Loans issued in 2022/23.

- Provisional figures for 2022/23 indicate a potential first, yet small decline in the take-up of Postgraduate Doctoral Loans.

- Finalised figures confirm a 5.9% increase in the number of full-time students claiming Disabled Students’ Allowance in academic year 2021/22.

- 3% increase in the amount claimed in Childcare Grant, reaching £244.1 million in 2022/23.

- By end-October 2023, a total of 1.17 million undergraduates and postgraduates have been awarded/paid a total of £4.81 billion for academic year 2023/24.

- Early look at academic year 2023/24 shows a continued decline in the number of EU (outside UK) students paid, due to the funding-policy change in 2021/22.

- Early figures indicate a potential 4.5% reduction in the number of new students receiving student finance in academic year 2023/24.

A parliamentary question on revising the formula used to calculate overseas earnings thresholds for student loan repayments, which apply to English and Welsh students who live overseas or work for a foreign employer, established that a review isn’t forthcoming. Minister Halfon confirmed that changing the formula would require a legislative amendment.

There’s also a House of Commons Library briefing on students and the rising cost of living. It considers how students have been affected by escalating costs and what financial support is available. The Library briefings are useful because they help non-Ministers to understand debate topics better whilst formulating their opinions, and they provide facts and figures with which to engage in the debate. The full briefing is 34 pages long but there’s a shorter high level summary here.

Wonkhe blog: For the first time in almost a decade we have official figures on the income and expenditure of students in England. Jim Dickinson finds big differences between the haves and have-nots.

Graduate Employment

The Graduate Job Market was debated in the House of Lords. Lord Londesborough opened the session asking the government what assessment they have made of the jobs market for graduates, and whether this assessment points to a mismatch between skills and vacancies.

Baroness Barran spoke on behalf of the government stating that one-third of vacancies in the UK are due to skills shortages and that the HE sector delivers some of the most in-demand occupational skills with the largest workforce needs, including training of nurses and teachers. The DfE published graduate labour market statistics showing that, in 2022, workers with graduate-level qualifications had an 87.3% employment rate and earned an average of £38,500. Both are higher than for non-graduates.

Undeterred, Lord Londesborough pressed that we have swathes of overqualified graduates in jobs not requiring a degree (he stated the figure was 42-50%) and that graduate vacancies are falling steeply, as is their wage premium, and students have now racked up more than £200 billion of debt, much of which will never be repaid.

The debate also touched on regional differences in graduate pay, the importance of the creative industries which require a highly skilled workforce, the teacher skills shortage and whether tuition fees should be forgiven for those becoming teachers, and health apprentices not covered by the levy. You can read the full exchange here.

AI in jobs

The DfE published analysis on the impact of AI on UK jobs and training. It finds:

- Professional occupations are more exposed to AI, particularly those associated with more clerical work and across finance, law and business management roles. This includes management consultants and business analysts; accountants; and psychologists. Teaching occupations also show higher exposure to AI, where the application of large language models is particularly relevant.

- The finance & insurance sector is more exposed to AI than any other sector. The other sectors most exposed to AI are information & communication; professional, scientific & technical; property; public administration & defence; and education.

- Workers in London and the South East have the highest exposure to AI, reflecting the greater concentration of professional occupations in those areas. Workers in the North East are in jobs with the least exposure to AI across the UK. However, overall the variation in exposure to AI across the geographical areas is much smaller than the variation observed across occupations or industries.

- Employees with higher levels of achievement are typically in jobs more exposed to AI. For example, employees with a level 6 qualification (equivalent to a degree) are more likely to work in a job with higher exposure to AI than employees with a level 3 qualification (equivalent to A-Levels).

- Employees with qualifications in accounting and finance through Further Education or apprenticeships, and economics and mathematics through Higher Education are typically in jobs more exposed to AI. Employees with qualifications at level 3 or below in building and construction, manufacturing technologies, and transportation operations and maintenance are in jobs that are least exposed to AI.

Enough Campaign

The Government announced the next (third) phase in the Enough campaign to tackle violence and abuse against women and girls, which focuses on HE. The government describe the initiative:

30 universities across the UK are partnering to deliver bespoke campaign materials designed to reflect the scenarios and forms of abuse that students could witness. It will see the wider rollout of the STOP prompt – Say something, Tell someone, Offer support, Provide a diversion – which provides the public with multiple options for intervening if they witness abuse in public places and around universities.

Graphics on posters, digital screens and university social media accounts will encourage students to act if they witness abuse, as part of wider efforts to make university campuses safer. The latest phase of Enough also contains billboard and poster advertising on public transport networks and in sports clubs, as well as social media adverts, including on platforms relevant to younger audiences.

Home Secretary, James Cleverly said: While the government will continue to bring into force new laws to tackle these vile crimes, equip the police to bring more criminals to justice and provide victims with the support they need, the Enough campaign empowers the public to do their part to call out abuse when they see it and safely intervene when appropriate.

Baroness Newlove, Victims’ Commissioner for England and Wales said: If we are to effectively tackle violence against women and girls, this requires a whole society approach with the education sector playing a key role. I welcome the latest phase of the Enough campaign as it expands into university campuses. Government commitments to future iterations of this campaign are crucial if we are to see the wider cultural shifts we know are necessary.

Apprenticeships

FE Week report that the party’s over for degree apprenticeships as Chancellor Jeremy Hunt plans to restrict use of the apprenticeship levy for degree level apprenticeships. Snippets: Multiple sources have said that Jeremy Hunt is concerned about the affordability of the levy amid a huge rise in the number of costly level 6 and 7 apprenticeships for older employees, while spending on lower levels and young people falls… Treasury officials have now floated the idea of limiting the use of levy cash that can be spent on the highest-level apprenticeships, but the Department for Education is understood to be resisting… Networks of training providers and universities contacted the Treasury this week to plead with the chancellor not to cut access to the courses, who claim the move is “political posturing” to appeal to certain parts of the electorate. Those involved in delivering the courses have also argued that the majority of level 6 and 7 management apprentices are in public services and “critical for the productivity agenda and fiscal sustainability”.

Think tank EDSK is in favour of Hunt’s approach. Wonkhe report: Those who have already completed a university degree should be banned from accessing levy-funded apprenticeships, the think tank EDSK has argued in a new report, which criticises the proliferation of degree apprenticeships used to send “existing staff on costly management training and professional development courses.” The report sets out recommendations for improving the skills system for those young people who choose not to study at university – another recommendation is potentially preventing employers from accessing levy funds if they train more apprentices aged above 25 than aged 16 to 24.

Moving from opinion to data:

The DfE published 2022/23 data on apprenticeships.

- Advanced apprenticeships accounted for 43.9% of starts (147,930) whilst higher apprenticeships accounted for 33.5% of starts (112,930).

- Higher apprenticeships continue to grow in 2022/23. Higher apprenticeship starts increased by 6.2% to 112,930 compared to 106,360 in 2021/22.

- Starts at Level 6 and 7 increased by 8.2% to 46,800 in 2022/23. This represents 13.9% of all starts for 2022/23. There were 43,240 Level 6 and 7 starts in 2021/22 (12.4% of starts).

- Starts supported by Apprenticeship Service Account (ASA) levy funds accounted for 68.1% (229,720).

Admissions

Recruitment

UCAS released their end of cycle data key findings. These are notable as this cycle included questions to collect information on disability and mental health conditions as well as free school meals entitlement, estrangement, caring responsibilities, parenting, and UK Armed Forces options.

- The number of accepted UK applicants sharing a disability increased to 103,000 in 2023, up from 77,000 in 2022 (+33.8%) and 58,000 in 2019 (+77.5%).

- Those sharing a mental health condition rose to 36,000 this year compared to 22,000 last year (+63.6%) and 16,000 in 2019 (+125%). (Possibly because the changes mean that fewer accepted students needed to select ‘other’ when sharing their individual circumstances – 5,460 in 2023 versus 6,700 in 2022 which is -18.5%.)

- The second highest number of UK 18-year-olds from the most disadvantaged backgrounds have secured a place at university or college this year. A total of 31,590 UK 18-year-olds from POLAR4 Quintile 1 have been accepted – down from the record of 32,415 in 2022 (-2.5%) but a significant increase on 26,535 in 2019 (+19%). …but…

- The entry rate gap between the most (POLAR4 Quintile 1) and least disadvantaged (POLAR4 Quintile 5) students has slightly widened to 2.16 compared to 2.09 in 2022.

- The number of accepted mature students (aged 21 and above) is down – 146,560 compared to 152,490 in 2022 (-3.9%) but an increase on 145,015 in 2019 (+1.1%).

Sander Kristel, Interim Chief Executive of UCAS, said: Today’s figures show growing numbers of students feel comfortable in sharing a disability or mental health condition as part of their UCAS application… This forms part of our ongoing commitment to improve the admissions process, helping to ensure that all students have available support and guidance to progress to higher education, no matter their background.

Also:

- There has been a decline in the number of accepted international students – 71,570 which is a decrease from 73,820 in 2022 (-3.0%) and 76,905 in 2019 (-6.9%). We see a different trend when broken down by international students from outside the EU – with 61,055 acceptances, down from 62,455 in 2022 (-2.2%) but significantly up from 45,455 in 2019 (+34%).

- Of the 1,860 T Level applicants, 97% received at least one offer. A total of 1,435 people with an achieved T Level have been placed at higher education, up from 405 last year (+254%).

Wonkhe has other thoughts and doesn’t quite believe the rosy picture UCAS is known to paint: while a decline in acceptances for 18-year-old undergraduate students could be explained in terms of disappointing A levels or the cost of living, a two per cent decline in applications – confirmed in last week’s end of cycle data from UCAS – is rather more worrying. Coming at a time of a widely reported slowdown in international recruitment as well, the worries begin to mount up. There are blogs delving deeper:

School curriculum breadth

Lord Jo Johnson has been chairing the Lords Select Committee on Education for 11-16 Year Olds. Its report (here) highlights that the EBacc has led to a narrowing of the curriculum away from creative, technical and specialist interest subjects, which isn’t ideal for future HE study. The committee’s inquiry was established in response to growing concerns that the 11-16 system is moving in the wrong direction, especially in relation to meeting the needs of a future digital and green economy. Research Professional have a nice short write up on the matter in Bacc to the future. Snippets:

- “Schools have accordingly adjusted their timetables and resourcing to promote these subjects to pupils and maximise their performance against these metrics,” the Lords committee says. “As a result, subjects that fall outside the EBacc—most notably creative, technical and vocational subjects—have seen a dramatic decline in take-up.”

- “The evidence we have received is compelling; change to the education system for 11-to-16-year-olds is urgently needed to address an overloaded curriculum, a disproportionate exam burden and declining opportunities to study creative and technical subjects,” Johnson said.

- It looks like more government education reform could be on the cards soon. If prime minister Rishi Sunak is returned at the next election—a big if, we appreciate—then he has post-16 reform in his sights, so we could be in for a busy time on that front.

Access & Participation

The OfS has a new approach to regulation, learning lessons from the 30 (ish) HEIs that rewrote their Access and Participation plans a year early.

Wonkhe blog: John Blake deletes even more of the cheat codes to access and participation.

- I’m also pleased that many wave 1 providers have put a greater focus on evaluation: hiring evaluation specialists, training staff, developing theories of change and evaluation plans for plan activities. This is promising for the future of the evidence base of what does and does not work relating to intervention strategies. We are keen to see this focus increase further and to see more evaluation plans that explore cause and effect robustly.

- I want to see more evidence of collaboration between universities and colleges and third sector organisations, schools, and employers to address the risks to equality of opportunity that current and prospective students may face. Joining forces brings together expertise and agility and great numbers of students who can benefit from interventions.

- I also want to see more ambitious work to raise attainment of students before they reach higher education. What the EORR clearly shows is that where a student does not have equal access to developing knowledge and skills prior to university, they are more likely to experience other risks at access, throughout their course and beyond.

- We heard an understandable nervousness from providers around setting out targets and activity where the success of the activity undertaken is not necessarily entirely in their control. This was particularly in relation to collaborative partnerships and around work to raise pre-16 attainment. Whilst this is entirely understandable, I encourage providers to take calculated risks, and to know that where expected progress is not being made, we will provide you with an opportunity to explain the reasons for this, as well as your plans to get back on track, where possible. Our regulation is not designed to catch anyone out who is doing the hard work – even where that work does not always lead to the outcomes we all want.

- We do not intend to update the access and participation data dashboard prior to May 2024 at the earliest. This is to ensure clarity, and as much time as possible for providers to work on new access and participation plans in light of delays to the first Data Futures collection of student data. Providers should use the data and insights that are currently available, including through the data dashboard published earlier this year, to support them to design their plans.

International

Short version – lots of debate on international students and migration. The Government plans for international students to continue to be counted in the net migration statistics and continues to be opposed to students bringing dependants into the country.

Here are the five key exchanges in which the matter was discussed in Parliament over the last few weeks.

- At Home Office oral questions (transcript) Wendy Chamberlain MP (Liberal Democrat) asked what assessment had been made of the potential merits of providing temporary visas to the dependants of visiting students and academics when the dependants are living in conflict zones. The Minister for Immigration, Rt Hon Robert Jenrick MP, said that migration should not be the first lever to pull in the event of a humanitarian crisis.

Jonathan Gullis (Conservative) described the recent ONS net migration statistics as completely unacceptable. He asked whether the Minister would support the New Conservatives’ proposal to extend the closure of the student dependant route to cover those enrolled on one-year research master’s degrees. The Minister stated that the level of legal migration was far too high and outlined the recent policy related to dependants. He believed the policy would have a substantive impact on the levels of net migration but added that the government was keeping all options under review and would take further action as required.

- The Commons chamber debated net migration through the urgent question route. Immigration Minister, Robert Jenrick MP, stated:

Earlier this year, we took action to tackle an unforeseen and substantial rise in the number of students bringing dependants into the UK to roughly 150,000. That means that, beginning with courses starting in January, students on taught postgraduate courses will no longer have the ability to bring dependants; only students on designated postgraduate research programmes will be able to bring dependants. That will have a tangible effect on net migration.

He went on to say (and it’s not clear if he is referring to students or net migration across all areas): It is crystal clear that we need to reduce the numbers significantly by bringing forward further measures to control and reduce the number of people coming here, and separately to stop the abuse and exploitation of our visa system by companies and individuals.

Alison Thewliss (SNP) challenged the anti-migration tone stating: I thank those people who have come to make their home here [Scotland] and to contribute to our universities, public services and health and care sector, and who have made our society and our economy all the richer for their presence. Have the Government thought this through? Who will carry out the vital tasks of those who have come to our shores if they pull up the drawbridge and send people away?

Tim Loughton highlighted that 135,000 visas were granted to dependants last year, up from 19,000 just three years ago, and around 100,000 visas were granted to Chinese students, up 87% over the past 10 years.

The Immigration Minister confirmed the government has considered a regional system of immigration but discounted it as unlikely to work in practice.

Paul Blomfield shared familiar messaging about the investment that international students bring to the UK and called for their removal from the migration statistics: International students contribute £42 billion annually to the UK. They are vital to the economies of towns and cities across the country. Most return home after their course. Those who do not are granted a visa for further study or a skilled workers visa, because we want them in the country. Students are not migrants. The public do not consider them to be migrants. Is it not time we took them out of the net migration numbers and brought our position into line with our competitors, such as the United States, whose Department of Homeland Security, as the arm of Government responsible for migration policy, does not count students in its numbers?

The Minister was unmoved, and responded: I do not think fiddling the figures is the answer to this challenge. The public want to see us delivering actual results and bringing down the numbers. Of course, universities and foreign students play an important part in the academic, cultural and economic life of the country, but it is also critical that universities are in the education business, not the migration business. I am afraid that we have seen a number of universities, perfectly legally but nonetheless abusing the visa system, promoting short courses to individuals whose primary interest is in using them as a backdoor to a life in the United Kingdom, invariably with their dependants. That is one of the reasons why we are introducing the measure to end the ability of students on short-taught courses to bring in dependants. Universities need to look to a different long-term business model, and not just rely on people coming in to do short courses, often of low academic value, where their main motivation is a life in the UK, not a first-rate education.

- Next the Lords debated net migration (end of November) – Lord Sharpe of Epsom, Home Office Minister, stated the government had introduced measures to tackle the substantial rise in students bringing dependants to the UK. Baroness Brinton flew the flag for international students, stating they add £42 billion to the UK economy. She questioned why the government constantly portrays them as a drain on the UK and why it is proposing to reduce their numbers, rather than recognising their direct contribution to our economy, communities and universities. The Minister replied that many students stayed in the UK after their studies and that they are remaining in the net migration statistics.

Lord Johnson asked the Minister for assurance that there was no plan to axe the graduate route for international students. The Minister replied there are no plans to affect the student graduate route. These measures are specifically targeted at dependants.

- Next up UUK summarise James Cleverly’s statement on legal migration from 4 December:

The Secretary of State confirmed that he had asked the Migration Advisory Committee (MAC) to review the Graduate route to ‘prevent abuse and protect the integrity and quality of UK’s outstanding higher education sector’. Taken together with announcements in May and those outlined below, he claimed this would result in around 300,000 fewer people coming to the UK.

Other announcements:

- End abuse of health and care visa by stopping overseas care workers from bringing family dependants.

- Increase the earning threshold for overseas workers by nearly 50% from £26,200 to £38,700.

- End the 20% going-rate salary discount for shortage occupations and replace the Shortage Occupation List with a new Immigration Salary List, which will retain a general threshold discount. The Migration Advisory Committee will review the new list against the increased salary thresholds in order to reduce the number of occupations on the list.

- Raise minimum income required for family visas to £38,700.

The Shadow Home Secretary, Rt Hon Yvette Cooper MP, said that Labour had called for (i) an end to the 20% ‘unfair discount’, (ii) increased salary thresholds to prevent exploitation, and (iii) a strengthened MAC. She proceeded to note that while the UK benefitted from international talent and students, the immigration system needed to be controlled and managed so that it was fair and effective. She criticised the government’s approach saying that there was nothing in the statement about training requirements or workforce plans.

Chris Grayling MP (Conservative) asked if there was a case for looking at who comes to study and if they should have an automatic right to work after they complete their studies. In his reply, the Home Secretary said that the UK’s university sector was a ‘global success story’ and widely respected across the world. He added that higher education should be a route to study, rather than a visa route by the back door.

Layla Moran MP (Liberal Democrat) criticised the government for ‘starving’ the science industry of lab technicians and other talent by introducing these new measures.

Patrick Grady MP (SNP) asked what steps the government was taking to negotiate more visa exchange programmes with the European Union and other countries that could allow the sharing of skills and experience across borders. The Home Secretary said he had negotiated a number of youth mobility programmes to attract the ‘brightest and the best’.

- On 5 December the Lords debated the legal migration statement. Lots of the content was similar to what we’ve already described above. Here we mention some additional points:

Lord Davies of Brixton (Labour) pointed to the impact that a fall in overseas students could have on the education provided for UK domiciled students. He urged the government to do more to encourage people to study in the UK. He warned that the measures announced, alongside proposals announced in May to ban PGT students from bringing dependants, would deter some international students from coming to the UK. He asked for reassurances that these factors will be considered in any impact assessments.

Baroness Bennett (Green) asked how much income was expected to be lost to UK universities in light of government predictions that 140,000 fewer people would come via student routes. She also asked about the regional impact of this.

The Minister also confirmed that the ban on dependants at Masters study level was not differentially applied based on subject. The ban applies to science students as much as humanities.

Finally, the House of Commons Library published a briefing on International students in UK higher education; the shorter summary here is a useful quick round up of the key points. The Home Office’s press release on its plan to cut net migration is here. Research Professional meander through some earlier international migration speculation (scroll to half way down if you want the more focussed content).

Recent Wonkhe coverage reports that the predicted loss in tuition fees arising from recent increases to student visa and health charges could be up to £630m over five years – a figure criticised by the House of Lords Secondary Legislation Scrutiny Committee. Reviewing the Home Office’s impact assessments for the Immigration Health Surcharge increase and the student visa charge increases, the committee argues that both should have been considered together, with the possible effects “greater than the sum of each individually.” The Home Office had informed the committee that the two impact assessments were carried out independently. Plus Wonkhe blogs:

International Students Digital Experience

Jisc published International students’ digital experience phase two: experiences and expectations. Findings:

- Most international students were positive about the use of technology enabled learning (TEL) on their course; notably, they appreciated how it gave them access to a wide range of digital resources, online libraries and recorded lectures.

- Most were using AI to support their learning and wanted more guidance on effective and appropriate practice.

- Home country civil digital infrastructure shapes digital practice, which in turn forms the basis of assumptions about how digital will be accessed and used in the UK.

- International students often struggled with practical issues relating to digital technologies, including setting up authentication and accessing university systems outside the UK.

There is a shorter summary and some key information here.

Inquiries and Consultations

Click here to view the updated inquiries and consultation tracker. Email us on policy@bournemouth.ac.uk if you’d like to contribute to any of the current consultations.

New consultations and inquiries:

The DfE has published a consultation on minimum service levels (MSLs) in education which sets out regulations the government may implement on strike action days to require a minimum educational delivery to be maintained (including within universities). If introduced, regulations would be brought forward under the powers provided to the Secretary of State in the Strikes (Minimum Service Levels) Act 2023.

The government states your feedback will help to inform the design of a minimum service level in schools, colleges and universities.

Minister Keegan’s ministerial statement launching the consultation is here and the consultation document is here; the response window closes on 30 January 2024. Please get in touch with Jane Forster if you wish to discuss this consultation or make a response.

Wonkhe even published a blog on the new consultation.

Other news

TEF: The remaining 53 TEF judgements for providers appealing their original results are expected to be published soon. Wonkhe got excited as the qualitative submissions, student submissions, and panel commentaries were published: Our initial analysis suggests that consistency across subject and student type, along with demonstrable responsiveness to feedback from students, have been key to securing positive judgements on the qualitative side of the exercise. They have three new blogs:

Growth contributor: A quick read from Research Professional – Andrew Westwood argues that the quietly interventionist autumn statement overlooked universities’ role in growth.

Cyber: From Wonkhe – David Kernohan talks to the KPMG team you call when your systems have been attacked and your data is at risk.

LLE: Wonkhe – New polling shows that demand for lifelong learning entitlement fee loans is not where the government may hope. Patrick Thomson tells us more. Also:

2023: The year in review – read HEPI’s annual take on the state of higher education.

Subscribe!

To subscribe to the weekly policy update simply email policy@bournemouth.ac.uk. A BU email address is required to subscribe.

External readers: Thank you to our external readers who enjoy our policy updates. Not all our content is accessible to external readers, but you can continue to read our updates which omit the restricted content on the policy pages of the BU Research Blog – here’s the link.

Did you know? You can catch up on previous versions of the policy update on BU’s intranet pages here. Some links require access to a BU account. BU staff not able to click through to an external link should contact eresourceshelp@bournemouth.ac.uk for further assistance.

JANE FORSTER

VC’s Policy Advisor

Follow: @PolicyBU on Twitter

We are currently preparing submissions to

We are currently preparing submissions to

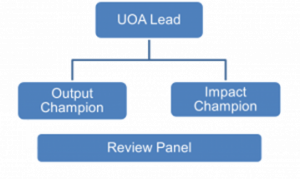

Would you like to play a key role in supporting preparations for the Engagement & Impact element of BU’s REF2029 submission?

Would you like to play a key role in supporting preparations for the Engagement & Impact element of BU’s REF2029 submission?

Nepal Study Days 2024

Nepal Study Days 2024 We can help promote your public engagement event or activity

We can help promote your public engagement event or activity Funded Public Engagement Opportunity – ESRC Festival of Social Science 2024 -Deadline for Applications Thursday 16 May

Funded Public Engagement Opportunity – ESRC Festival of Social Science 2024 -Deadline for Applications Thursday 16 May 1 WEEK REMAINING- Postgraduate Research Experience Survey (PRES) 2024

1 WEEK REMAINING- Postgraduate Research Experience Survey (PRES) 2024 Conversation article: How 2-Tone brought new ideas about race and culture to young people beyond the inner cities

Conversation article: How 2-Tone brought new ideas about race and culture to young people beyond the inner cities MSCA Postdoctoral Fellowships 2024

MSCA Postdoctoral Fellowships 2024 Horizon Europe News – December 2023

Horizon Europe News – December 2023